It all started, as most tech disasters do, with a seductive whisper. “Just describe your infrastructure with YAML,” Kubernetes cooed. “It’ll be easy,” it said. And we, like fools in a love story, believed it.

At first, it was a beautiful romance. A few files, a handful of lines. It was elegant. It was declarative. It was… manageable. But entropy, the nosy neighbor of every DevOps team, had other plans. Our neat little garden of YAML files soon mutated into a sprawling, untamed jungle of configuration.

We had 12 microservices jostling for position, spread across 4 distinct environments, each with its own personality quirks and dark secrets. Before we knew it, we weren’t writing infrastructure anymore; we were co-authoring a Byzantine epic in a language seemingly designed by bureaucrats with a fetish for whitespace.

The question that broke the camel’s back

The day of reckoning didn’t arrive with a server explosion or a database crash. It came with a question. A question that landed in our team’s Slack channel with the subtlety of a dropped anvil, courtesy of a junior engineer who hadn’t yet learned to fear the YAML gods.

“Hey, why does our staging pod have a different CPU limit than prod?”

Silence. A deep, heavy, digital silence. The kind of silence that screams, “Nobody has a clue.”

What followed was an archaeological dig into the fossil record of our own repository. We unearthed layers of abstractions we had so cleverly built, peeling them back one by one. The trail led us through a hellish labyrinth:

- We started at deployment.yaml, the supposed source of all truth.

- That led us to values.yaml, the theoretical source of all truth.

- From there, we spelunked into values.staging.yaml, where truth began to feel… relative.

- We stumbled upon a dusty patch-cpu-emergency.yaml, a fossil from a long-forgotten crisis.

- Then we navigated the dark forest of custom/kustomize/base/deployment-overlay.yaml.

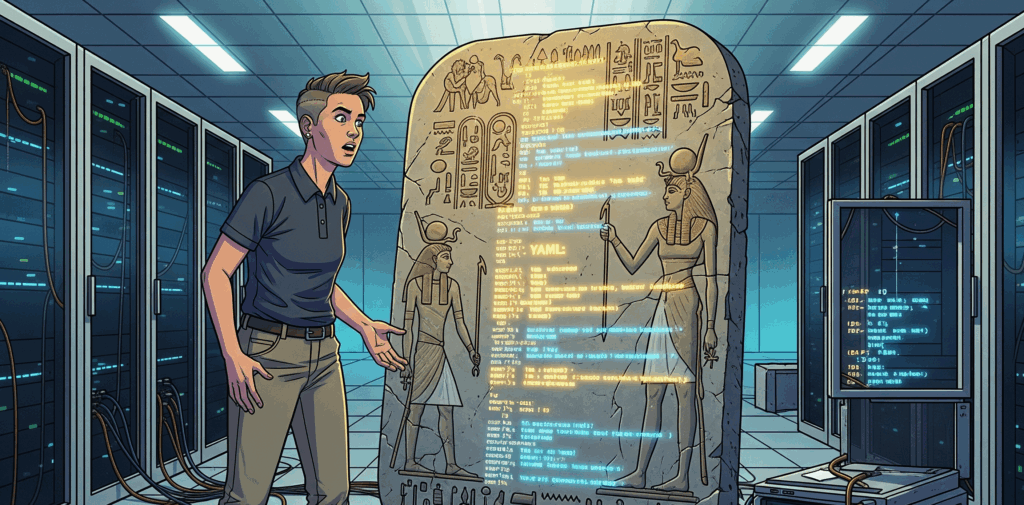

- And finally, we reached the Rosetta Stone of our chaos: an argocd-app-of-apps.yaml.

The revelation was as horrifying as finding a pineapple on a pizza: we had declared the same damn value six times, in three different formats, using two tools that secretly despised each other. We weren’t managing the configuration. We were performing a strange, elaborate ritual and hoping the servers would be pleased.

That’s when we knew. This wasn’t a configuration problem. It was an existential crisis. We were, without a doubt, deep in YAML Hell.
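For the record, the dig itself can be automated. Here is a minimal Python sketch that walks a stack of config layers in application order and reports every file that sets a given key. The layer order and the CPU values are invented for illustration, and each dict stands in for a file you would parse with a YAML library of your choice.

```python
from typing import Any

# Hypothetical layers in the order they are applied; later layers win.
# The values are made up; in practice each dict comes from one parsed file.
LAYERS: list[tuple[str, dict[str, Any]]] = [
    ("values.yaml", {"resources": {"limits": {"cpu": "500m"}}}),
    ("values.staging.yaml", {"resources": {"limits": {"cpu": "250m"}}}),
    ("patch-cpu-emergency.yaml", {"resources": {"limits": {"cpu": "1"}}}),
]


def lookup(tree: dict[str, Any], dotted_key: str) -> Any:
    """Return the value at a dotted path, or None if any segment is missing."""
    node: Any = tree
    for part in dotted_key.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def trace(layers: list[tuple[str, dict[str, Any]]], dotted_key: str) -> list[tuple[str, Any]]:
    """List every (file, value) pair that sets the key, in application order."""
    hits = []
    for name, tree in layers:
        value = lookup(tree, dotted_key)
        if value is not None:
            hits.append((name, value))
    return hits


history = trace(LAYERS, "resources.limits.cpu")
for name, value in history:
    print(f"{name}: {value}")
print("effective:", history[-1][1] if history else "unset")
```

The last entry in the history is the one that wins, which is usually the answer to the junior engineer's question.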

The tools that promised heaven and delivered purgatory

Let’s talk about the “friends” who were supposed to help. These tools promised to be our saviors, but without discipline, they just dug our hole deeper.

Helm, the chaotic magician

Helm is like a powerful but slightly drunk magician. When it works, it pulls a rabbit out of a hat. When it doesn’t, it sets the hat on fire, and the rabbit runs off with your wallet.

The Promise: Templating! Variables! A whole ecosystem of charts!

The Reality: Debugging becomes a form of self-torment that involves piping helm template into grep and praying. You end up with chains of defaults inside your templates that look like this:

    image:
      repository: {{ .Values.image.repository | quote }}
      tag: {{ .Values.image.tag | default .Chart.AppVersion }}
      pullPolicy: {{ .Values.image.pullPolicy | default "IfNotPresent" }}

This looks innocent enough. But then someone forgets to pass image.tag for a specific environment, the template quietly falls back to .Chart.AppVersion, and you deploy whatever version the chart last declared to production on a Friday afternoon. Beautiful.
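One guard against a forgotten image.tag is to check every environment's values for required keys before anything gets rendered. What follows is a rough Python sketch of that idea, not something lifted from our pipeline: the list of required keys, the registry name, and the sample values are all assumptions, and the dicts stand in for parsed values files. Helm also ships a `required` template function that fails the render from inside the chart, which covers the same ground.

```python
from typing import Any

# Assumed policy for illustration: keys every environment must set explicitly.
REQUIRED_KEYS = ["image.repository", "image.tag"]


def get_path(values: dict[str, Any], dotted_key: str) -> Any:
    """Return the value at a dotted path, or None if any segment is missing."""
    node: Any = values
    for part in dotted_key.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def missing_keys(values: dict[str, Any], required: list[str] = REQUIRED_KEYS) -> list[str]:
    """Return the required keys that are absent or empty in one environment."""
    return [key for key in required if get_path(values, key) in (None, "")]


# Made-up sample data: prod forgot to pin a tag.
environments = {
    "staging": {"image": {"repository": "registry.example.com/user-service", "tag": "1.4.2"}},
    "prod": {"image": {"repository": "registry.example.com/user-service"}},
}

problems = {env: missing_keys(vals) for env, vals in environments.items()}
for env, keys in problems.items():
    if keys:
        print(f"{env}: missing {', '.join(keys)}")
```

In CI, a non-empty result for any environment would fail the build before Friday afternoon gets a chance to.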

Kustomize, the master of patches

Kustomize is the “sensible” one. It’s built into kubectl. It promises clean, layered configurations. It’s like organizing your Tupperware drawer with labels.

The Promise: A clean base and tidy overlays for each environment.

The Reality: Your patch files quickly become a mystery box. You see this in your kustomization.yaml:

    patchesStrategicMerge:
    - increase-replica-count.yaml
    - add-resource-limits.yaml
    - disable-service-monitor.yaml

Where are these files? What do they change? Why does disable-service-monitor.yaml only apply to the dev environment? Good luck, detective. You’ll need it.

ArgoCD, the all-seeing eye (that sometimes blinks)

GitOps is the dream. Your Git repo is the single source of truth. No more clicking around in a UI. ArgoCD or Flux will make it so.

The Promise: Declarative, automated sync from Git to cluster. Rollbacks are just a git revert away.

The Reality: If your Git repo is a dumpster fire of conflicting YAML, ArgoCD will happily, dutifully, and relentlessly sync that dumpster fire to production. It won’t stop you. One bad merge, and you’ve automated a catastrophe.

Our escape from YAML hell was a five-step sanity plan

We knew we couldn’t burn it all down. We had to tame the beast. So, we gathered the team, drew a line in the sand, and created five commandments for configuration sanity.

1. We built a sane repo structure

The first step was to stop the guesswork. We enforced a simple, predictable layout for every single service.

    ├── base/
    │   ├── deployment.yaml
    │   ├── service.yaml
    │   └── configmap.yaml
    └── overlays/
        ├── dev/
        │   ├── kustomization.yaml
        │   └── values.yaml
        ├── staging/
        │   ├── kustomization.yaml
        │   └── values.yaml
        └── prod/
            ├── kustomization.yaml
            └── values.yaml

This simple change eliminated 80% of the “wait, which file do I edit?” conversations.
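A layout only stays predictable if something checks it. As a minimal sketch, a script along these lines could run in CI and list the expected files a service directory is missing. The file names come from the tree above; the function names and the idea of running it per service directory are assumptions for illustration.

```python
from pathlib import Path

# Expected files, relative to one service directory, taken from the layout above.
BASE_FILES = ["deployment.yaml", "service.yaml", "configmap.yaml"]
ENVIRONMENTS = ["dev", "staging", "prod"]
OVERLAY_FILES = ["kustomization.yaml", "values.yaml"]


def expected_paths() -> list[Path]:
    """Build the list of relative paths every service is supposed to have."""
    paths = [Path("base") / name for name in BASE_FILES]
    for env in ENVIRONMENTS:
        paths += [Path("overlays") / env / name for name in OVERLAY_FILES]
    return paths


def missing_files(service_dir: Path) -> list[str]:
    """Return the expected files that do not exist under service_dir."""
    return [
        path.as_posix()
        for path in expected_paths()
        if not (service_dir / path).is_file()
    ]
```

Calling `missing_files(Path("services/user-service"))` (a hypothetical path) returns an empty list for a well-behaved service and a to-do list for everything else.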

2. One source of truth for values

This was a sacred vow. Each environment gets one values.yaml file. That’s it. We purged the heretics:

- values-prod-final.v2.override.yaml

- backup-of-values.yaml

- donotdelete-temp-config.yaml

If a value wasn’t in the designated values.yaml for that environment, it didn’t exist. Period.
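Vows are easier to keep when a script is watching. Here is a small Python sketch of the purge as a repeatable check: it flags any file in an overlay directory that is not on an allowlist. The allowlist is an assumption based on the layout described earlier; a team that keeps patch files next to its overlays would need a longer one.

```python
from pathlib import Path

# Assumed allowlist: the only files an overlay directory may contain.
ALLOWED_IN_OVERLAY = {"kustomization.yaml", "values.yaml"}


def heretics(overlay_dir: Path) -> list[str]:
    """Return the names of files in overlay_dir that are not on the allowlist."""
    return sorted(
        entry.name
        for entry in overlay_dir.iterdir()
        if entry.is_file() and entry.name not in ALLOWED_IN_OVERLAY
    )


def check_service(service_dir: Path) -> dict[str, list[str]]:
    """Map each environment under overlays/ to its unexpected files, omitting clean ones."""
    report: dict[str, list[str]] = {}
    for overlay in sorted((service_dir / "overlays").iterdir()):
        if overlay.is_dir():
            extras = heretics(overlay)
            if extras:
                report[overlay.name] = extras
    return report
```

An empty report means the vow holds. Anything else names the environment and the offending files.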

3. We stopped mixing Helm and Kustomize

You have to pick a side. We made a rule: if a service requires complex templating logic, use Helm. If it primarily needs simple overlays (like changing replica counts or image tags per environment), use Kustomize. Using both on the same service is like trying to write a sentence in two languages at once. It’s a recipe for suffering.

4. We render everything before deploying

Trust, but verify. We added a mandatory step in our CI pipeline to render the final YAML before it ever touches the cluster.

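    # Helm services: render the chart with the environment's values, then validate
    helm template . --values overlays/prod/values.yaml | kubeval -

    # Kustomize services: build the overlay directory, then validate
    # (kustomize build takes a directory argument; it does not read manifests from stdin)
    kustomize build overlays/prod | kubeval -

This simple script does three magical things:

- It validates that the output is well-formed YAML that matches the Kubernetes resource schemas.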

- It lets us see exactly what is about to be applied.

- It has completely eliminated the “well, that’s not what I expected” class of production incidents.
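If the CI step outgrows a one-liner, the same render-then-validate flow fits in a few lines of Python. This is a sketch under assumptions: each service uses either Helm or Kustomize (per rule 3), the overlay paths follow the layout shown earlier, and the `helm`, `kustomize`, and `kubeval` binaries are on the PATH. The function names are hypothetical.

```python
import subprocess


def render_command(tool: str, env: str) -> list[str]:
    """Return the command that renders the final manifests for one environment."""
    if tool == "helm":
        return ["helm", "template", ".", "--values", f"overlays/{env}/values.yaml"]
    if tool == "kustomize":
        return ["kustomize", "build", f"overlays/{env}"]
    raise ValueError(f"unsupported tool: {tool!r} (expected 'helm' or 'kustomize')")


def render_and_validate(tool: str, env: str) -> str:
    """Render the manifests, pipe them through kubeval, and return the rendered YAML.

    check=True makes either step raise CalledProcessError on a non-zero exit,
    which is what fails the CI job.
    """
    rendered = subprocess.run(
        render_command(tool, env), check=True, capture_output=True, text=True
    ).stdout
    subprocess.run(["kubeval", "-"], input=rendered, check=True, text=True)
    return rendered
```

Returning the rendered text means the same call can feed a diff or an artifact upload, so what was validated is exactly what gets reviewed.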

5. We built a simple config CLI

To make the right way the easy way, we built a small internal CLI tool. Now, instead of navigating the YAML jungle, an engineer simply runs:

    $ ops-cli config generate --app=user-service --env=prod

This tool:

- Pulls the correct base templates and overlay values.

- Renders the final, glorious YAML.

- Validates it against our policies.

- Shows the developer a diff of what will change in the cluster.

- Saves lives and prevents hair loss.
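Our tool is internal, so treat the following as a sketch of the shape rather than the real thing. It shows only the argument parsing and the diff step, using Python's standard library. The subcommand layout mirrors the command shown above; everything else, including the function names and the "cluster" and "rendered" labels, is an assumption.

```python
import argparse
import difflib


def build_parser() -> argparse.ArgumentParser:
    """Parser for a hypothetical `ops-cli config generate --app=... --env=...`."""
    parser = argparse.ArgumentParser(prog="ops-cli")
    commands = parser.add_subparsers(dest="command", required=True)
    config = commands.add_parser("config", help="work with rendered configuration")
    actions = config.add_subparsers(dest="action", required=True)
    generate = actions.add_parser("generate", help="render and diff the final YAML")
    generate.add_argument("--app", required=True, help="service name")
    generate.add_argument("--env", required=True, help="target environment")
    return parser


def manifest_diff(current: str, rendered: str) -> str:
    """Return a unified diff from the live manifest to the newly rendered one."""
    lines = difflib.unified_diff(
        current.splitlines(),
        rendered.splitlines(),
        fromfile="cluster",
        tofile="rendered",
        lineterm="",
    )
    return "\n".join(lines)


args = build_parser().parse_args(
    ["config", "generate", "--app=user-service", "--env=prod"]
)
print(f"generating config for {args.app} in {args.env}")
print(manifest_diff("replicas: 2", "replicas: 3"))
```

An empty diff means the cluster already matches Git, which is the most reassuring output a config tool can produce.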

YAML is now a tool again, not a trap.

The afterlife is peaceful

YAML didn’t ruin our lives. We did, by refusing to treat it with the respect it demands. Templating gives you incredible power, but with great power comes great responsibility… and redundancy, and confusion, and pull requests with 10,000 lines of whitespace changes.

Now, we treat our YAML like we treat our application code. We lint it. We test it. We render it. And most importantly, we’ve built a system that makes it difficult to do the wrong thing. It’s the institutional equivalent of putting childproof locks on the kitchen cabinets. A determined toddler could probably still get to the cleaning supplies, but it would require a conscious, frustrating effort. Our system doesn’t make us smarter; it just makes our inevitable moments of human fallibility less catastrophic. It’s the guardrail on the scenic mountain road of configuration. You can still drive off the cliff, but you have to really mean it.

Our infrastructure is no longer a hieroglyphic. It’s just… configuration. And the resulting boredom is a beautiful thing.