Amazon API Gateway is a managed service that allows developers to create, publish, maintain, monitor, and secure APIs at scale. Imagine you’re building an application where different types of clients need to interact with backend services: API Gateway steps in to bridge that communication. From serverless functions, like AWS Lambda, to Java microservices running on Amazon EC2, API Gateway helps unify access and security while supporting scalability and cost control. It streamlines development by providing a standardized interface between architecture components, which reduces complexity and improves maintainability.

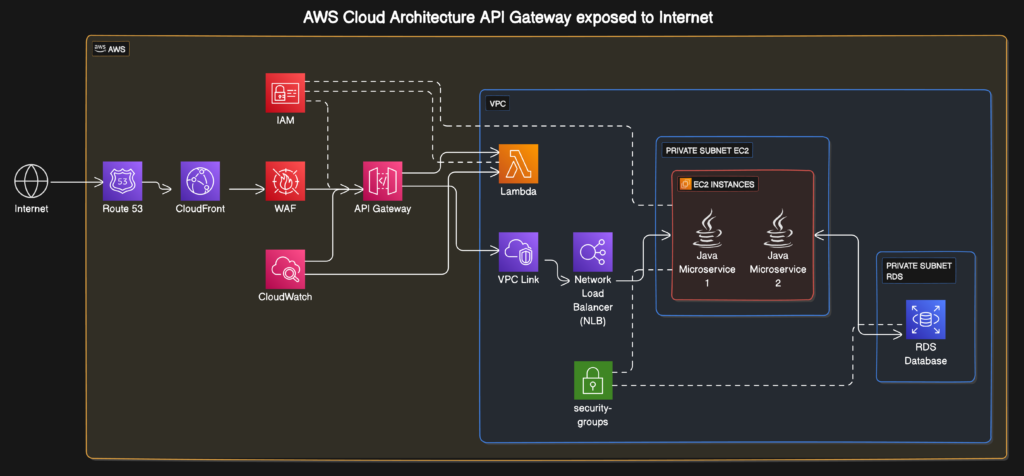

In this guide, I’ll walk you through an architecture that securely exposes an API using AWS services, such as API Gateway, CloudFront, Lambda, Network Load Balancers (NLB), and others. We’ll detail each step, referencing a diagram to illustrate how all these components work together harmoniously. I hope to make this information as approachable as possible, like a conversation over coffee, where I explain concepts clearly, even if you’re new to AWS services. By the end of this guide, you should have a solid understanding of how these pieces come together to create a secure, scalable API.

Amazon API Gateway Basics

API Gateway allows you to create APIs that can serve as a front door to your backend services. Whether you have Lambda functions executing your business logic or traditional microservices running on EC2 instances, API Gateway manages traffic, secures APIs, and integrates well with AWS’s ecosystem, ensuring high availability and scalability. It acts as the centralized gateway for all the external requests coming to your application and provides a seamless way to manage those requests without overloading your backend.

API Gateway helps you manage the entire lifecycle of your API. Imagine it as the receptionist of a large office building: it controls who comes in, directs them to the appropriate room, and even handles security checks. Your backend services, whether they are Lambda functions or Java-based microservices, don’t have to implement authentication, logging, or rate limiting themselves, because API Gateway can handle those concerns at the edge of your system. This lets your development team focus on core functionality instead of the overhead of managing these security and operational concerns.

The AWS Architecture to Expose an API

Let’s explore the architecture itself. The diagram accompanying this article details an architecture that effectively exposes an API to the internet, utilizing multiple AWS services to create a robust and secure environment. Each component in the architecture has a specific role, and understanding these roles will help you see how they work together to create a seamless user experience.

1. Entry Point via Amazon Route 53 and CloudFront

The entry point for users starts with Amazon Route 53, which provides domain name resolution. It makes your custom domain discoverable by mapping it to the CloudFront distribution that sits in front of your API Gateway endpoint. Once the name is resolved, requests are routed through Amazon CloudFront, a content delivery network (CDN) service. This adds benefits like caching and content delivery optimization, reducing latency for clients globally. For responses that are safe to cache, CloudFront can significantly reduce the number of calls that reach API Gateway, which also saves cost by reducing the usage of downstream resources.

Think of CloudFront as a system of shortcuts. When someone tries to access your API from the other side of the globe, they hit a CloudFront edge location, which reduces travel time and ensures a faster response, saving both your API and the user precious milliseconds. In addition, CloudFront adds a layer of security by keeping certain attacks from reaching your API Gateway, since it can use geo-restriction and SSL/TLS encryption to protect your data.
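To make the caching idea concrete, here is a minimal sketch of a time-to-live (TTL) cache standing in for a single edge location. It is a toy model, not how CloudFront is implemented: the `EdgeCache` class, its `origin_calls` counter, and the 60-second TTL are all assumptions made for illustration.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Toy TTL cache standing in for one CDN edge location (illustrative only)."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], str],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}
        self.origin_calls = 0  # how many requests actually reached the origin

    def get(self, path: str) -> str:
        now = self._clock()
        cached = self._store.get(path)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]  # cache hit: the origin is not contacted
        # Cache miss or expired entry: go back to the origin and store the result.
        self.origin_calls += 1
        body = self._fetch(path)
        self._store[path] = (now, body)
        return body


# Usage with a fake clock so the behavior is deterministic.
fake_now = [0.0]
cache = EdgeCache(lambda path: "body:" + path, ttl_seconds=60, clock=lambda: fake_now[0])
cache.get("/products")   # miss, origin is called
cache.get("/products")   # hit, served from the edge
fake_now[0] = 61.0
cache.get("/products")   # entry expired, origin is called again
```

In this model, only two of the three requests reach the origin, which is the same effect the paragraph above describes for cacheable API responses.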

2. Security with AWS WAF and API Gateway

The next layer is AWS WAF (Web Application Firewall). WAF is the gatekeeper that examines incoming traffic before it reaches your API. It helps block common attacks, such as SQL injection or cross-site scripting, safeguarding your API from harmful traffic. WAF rules can be configured to block, allow, or count requests based on customizable conditions, such as IP addresses, HTTP headers, or request bodies.
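The block, allow, and count actions can be illustrated with a simplified rule evaluator. This is a sketch of the general idea only, assuming rules are checked in priority order, that allow and block stop evaluation, and that count records a match and keeps going. The `Rule` type, the rule names, and the naive string check for SQL injection are hypothetical and far cruder than real WAF rules.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Request = Dict[str, str]


@dataclass
class Rule:
    name: str
    priority: int                        # lower numbers are evaluated first
    matches: Callable[[Request], bool]
    action: str                          # "allow", "block", or "count"


def evaluate(
    rules: List[Rule], request: Request, default_action: str = "allow"
) -> Tuple[str, List[str]]:
    """Return the final action plus the names of any matching count rules."""
    counted: List[str] = []
    for rule in sorted(rules, key=lambda r: r.priority):
        if not rule.matches(request):
            continue
        if rule.action == "count":
            counted.append(rule.name)    # record the match, keep evaluating
            continue
        return rule.action, counted      # allow/block ends evaluation
    return default_action, counted


# Hypothetical rules for illustration (the IP is from a documentation range).
RULES = [
    Rule("block-bad-ip", 0, lambda r: r.get("ip") == "203.0.113.7", "block"),
    Rule("count-no-user-agent", 1, lambda r: not r.get("user-agent"), "count"),
    Rule("block-naive-sqli", 2, lambda r: "' or 1=1" in r.get("body", "").lower(), "block"),
]

decision = evaluate(RULES, {"ip": "198.51.100.1", "body": "x' OR 1=1 --"})
```

Count rules are useful for trying out a new condition against live traffic before you switch it to block.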

From there, the requests arrive at API Gateway. The API Gateway processes the incoming request, applying rate limiting, authentication, and integrating seamlessly with other AWS services. Here, you’re ensuring that only authorized requests reach your backend. It also allows you to throttle requests, ensuring your backend services do not get overwhelmed during a traffic spike.
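API Gateway's throttling is based on a token bucket, with a steady-state rate and a burst capacity. The following is a small simulation of that idea, not API Gateway's actual code: the `TokenBucket` class and the numbers used (2 requests per second, burst of 3) are assumptions chosen to keep the example easy to follow.

```python
class TokenBucket:
    """Minimal token bucket: `rate_per_second` refill, `burst` maximum tokens."""

    def __init__(self, rate_per_second: float, burst: int) -> None:
        self.rate = rate_per_second
        self.capacity = burst
        self.tokens = float(burst)   # start with a full bucket
        self.last_refill = 0.0

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never above the burst capacity.
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a throttled request would receive 429 Too Many Requests


bucket = TokenBucket(rate_per_second=2, burst=3)
first_burst = [bucket.allow(0.0) for _ in range(4)]   # four requests at t=0
one_second_later = [bucket.allow(1.0) for _ in range(3)]
```

The burst absorbs a short spike, while the refill rate caps the sustained load your backend has to handle.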

AWS IAM (Identity and Access Management) also comes into play, managing who has permission to access specific components. IAM policies control which principals can invoke the Lambda functions, including API Gateway itself, and which AWS APIs the Java microservices on EC2 are allowed to call. The EC2 instances should run with IAM roles rather than embedded long-lived credentials, and if IAM database authentication is enabled on RDS, those roles also determine which instances can connect to the database. By assigning narrowly scoped roles, you can tightly control which services or individuals can interact with the backend, minimizing the potential for unauthorized access.
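As one example of such a permission, a Lambda function needs a resource-based policy statement that lets API Gateway invoke it. The sketch below only builds that statement as a Python dictionary. The account ID, region, function name, and API ID are placeholders, and the helper name `lambda_invoke_statement` is made up for this guide.

```python
import json
from typing import Any, Dict


def lambda_invoke_statement(function_arn: str, api_arn_pattern: str) -> Dict[str, Any]:
    """Statement allowing API Gateway to invoke one function from one API."""
    return {
        "Sid": "AllowApiGatewayInvoke",
        "Effect": "Allow",
        "Principal": {"Service": "apigateway.amazonaws.com"},
        "Action": "lambda:InvokeFunction",
        "Resource": function_arn,
        # Restrict the permission to calls coming from a specific API.
        "Condition": {"ArnLike": {"AWS:SourceArn": api_arn_pattern}},
    }


# Placeholder identifiers, not taken from the architecture in this guide.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        lambda_invoke_statement(
            "arn:aws:lambda:us-east-1:123456789012:function:orders-handler",
            "arn:aws:execute-api:us-east-1:123456789012:a1b2c3d4e5/*/GET/orders",
        )
    ],
}

policy_json = json.dumps(policy, indent=2)
```

Scoping the condition to a single API, method, and path follows the same least-privilege idea as the roles discussed above.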

3. Lambda Functions and EC2 Microservices as Backend Services

API Gateway is versatile. In this architecture, you’ll see two main paths from API Gateway:

- AWS Lambda: If your service logic is serverless, AWS Lambda handles those operations. For example, small functions that perform specific tasks can be triggered directly. Lambda provides scalability without the hassle of managing infrastructure. Lambda is ideal for event-driven applications, where you need to process incoming requests on-demand without needing a dedicated server. Each function runs in an isolated environment, which means even if there’s an issue with one execution, it doesn’t affect others.

- VPC Link to EC2 Instances: When dealing with microservices hosted in a VPC (Virtual Private Cloud), VPC Link is used to securely connect the API Gateway to those services. In this architecture, the VPC Link connects to a Network Load Balancer (NLB). The NLB then distributes traffic to Java microservices running on EC2 instances within a private subnet. This layer provides isolation, ensuring that the microservices aren’t directly exposed to the internet. The use of VPC Link and NLB ensures that all communication between API Gateway and EC2 instances remains within the secure boundaries of the AWS network, enhancing security.

Think of the NLB as the traffic officer. It receives all the cars (requests) from the VPC Link and directs them to one of the EC2 instances (Java microservices), making sure none of them get overwhelmed. This ensures that your backend can handle requests efficiently, even during peak load times, by spreading the requests across multiple instances.
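For the Lambda path described above, a function behind API Gateway can be very small. Here is a minimal sketch of a handler, assuming the Lambda proxy integration, where the function receives the HTTP request as an event and returns a dictionary with `statusCode`, `headers`, and `body`. The `name` query parameter and the greeting are invented for the example.

```python
import json
from typing import Any, Dict


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Entry point for a Lambda proxy integration (illustrative)."""
    # The query string map can be absent or None when no parameters are sent.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        # With the proxy integration, the body must be a string.
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation with a hand-written event, no AWS account required.
response = handler({"queryStringParameters": {"name": "Ada"}}, None)
```

Because the handler is a plain function, you can exercise it locally like this before wiring it to an API Gateway route.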

4. An RDS Database for Data Persistence

The backend services running on EC2 interact with an Amazon RDS (Relational Database Service) instance. The RDS instance sits within another private subnet in the VPC, providing a managed database that you can scale as the demands of your application grow. It’s isolated from the public internet, with access controlled strictly by security groups so that only your EC2 microservices can communicate with it. The subnet is private, meaning it has no direct route to the internet, and only the specific port used by the database (port 3306 for MySQL, for example) is open to inbound traffic from authorized EC2 instances. This minimizes the risk of unauthorized access or potential attacks.

Moreover, if IAM database authentication is enabled, the IAM roles assigned to the EC2 instances let each connection to the RDS database authenticate with a short-lived token instead of a stored password. The controlled access combined with the private subnet adds up to a defense-in-depth approach, significantly strengthening the security posture of the application. This setup means that even if an attacker were to gain access to other parts of the infrastructure, reaching the RDS database would still be considerably harder because of the multiple layers of protection.
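The database security group rule described above can be written down as data. This sketch builds a single ingress permission that allows MySQL traffic only from the application tier's security group. The function name and the security group ID are hypothetical, and the dictionary follows the `IpPermissions` shape that the EC2 API uses.

```python
from typing import Any, Dict


def mysql_ingress_from(app_security_group_id: str, port: int = 3306) -> Dict[str, Any]:
    """Ingress permission allowing one port, only from another security group."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        # Referencing a security group instead of a CIDR range means only
        # instances that belong to that group can connect.
        "UserIdGroupPairs": [
            {"GroupId": app_security_group_id, "Description": "MySQL from app tier"}
        ],
    }


# Hypothetical security group ID for the EC2 microservices.
rule = mysql_ingress_from("sg-0123456789abcdef0")
```

If you use boto3, a dictionary like this could be passed in the `IpPermissions` list of `authorize_security_group_ingress` on the database's security group. Treat that as a starting point and adapt the port to your database engine.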

5. Monitoring with Amazon CloudWatch

Lastly, everything needs to be monitored. Amazon CloudWatch is used to track metrics and collect logs across API Gateway, Lambda, and the EC2 instances. CloudWatch helps you understand how the system is behaving, lets you define alarms for anything out of the ordinary, and gives you ongoing insight into your services’ health. By setting up CloudWatch alarms, you can automatically get notifications when something isn’t performing as expected, so you can respond quickly and protect availability.
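As a sketch of what such an alarm might look like, the function below assembles the parameters for an alarm on server-side errors from an API Gateway REST API. The alarm name, the threshold of 5 errors per minute over 5 minutes, the API name, and the SNS topic ARN are assumptions for illustration. The keys match the parameters accepted by CloudWatch's `PutMetricAlarm` operation.

```python
from typing import Any, Dict


def api_5xx_alarm_params(api_name: str, sns_topic_arn: str, threshold: int = 5) -> Dict[str, Any]:
    """Parameters for an alarm that fires on sustained 5XX errors."""
    return {
        "AlarmName": f"{api_name}-5xx-errors",
        "Namespace": "AWS/ApiGateway",
        "MetricName": "5XXError",
        "Dimensions": [{"Name": "ApiName", "Value": api_name}],
        "Statistic": "Sum",
        "Period": 60,                 # evaluate one-minute buckets
        "EvaluationPeriods": 5,       # for five consecutive minutes
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",   # no traffic is not an outage
        "AlarmActions": [sns_topic_arn],      # where notifications are sent
    }


# Placeholder API name and topic ARN.
alarm = api_5xx_alarm_params(
    "orders-api", "arn:aws:sns:us-east-1:123456789012:ops-alerts"
)
```

With boto3, these parameters could be passed as keyword arguments to the CloudWatch client's `put_metric_alarm` call. The right threshold depends on your traffic, so tune it against real metrics rather than copying these values.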

Security groups add a further layer of control, dictating what traffic is allowed in and out of the resources in the private subnets. These configurations ensure that only legitimate requests are allowed to reach the EC2 instances or interact with the RDS database. By fine-tuning the security group rules, you can restrict access further, allowing only specific IP ranges or other security groups to communicate with your services.

Final Thoughts and Recommendations

Here are two important considerations to keep in mind as you design your architecture:

- Clarifying the Connection Between API Gateway and VPC Link: It’s essential to understand that the VPC Link exists specifically so API Gateway can communicate securely with services residing inside the VPC. This is different from invoking Lambda functions, which API Gateway calls through the Lambda service itself, with no VPC Link involved.

- Balancing Security and Simplicity: The architecture presented here represents a foundational approach to securely exposing an API. It’s valuable to highlight additional security options, such as implementing Network ACLs (NACLs) or creating more granular Security Groups, as a way to enhance the balance between accessibility and security. This approach allows you to keep the initial design straightforward while providing paths for more sophisticated security as requirements evolve.

I hope this guide has demystified the architecture for you. Think of it like a well-oiled machine or even a kitchen during the dinner rush. Every part has a job: API Gateway is the head chef calling out orders, CloudFront is the waiter running dishes out to customers quickly, and WAF is the security guard keeping everything safe. When each part knows its role and plays it well, the whole restaurant runs smoothly. Understanding these concepts will not only help you build better applications but will also give you the confidence to scale and secure your services, just like a seasoned chef managing a busy kitchen.