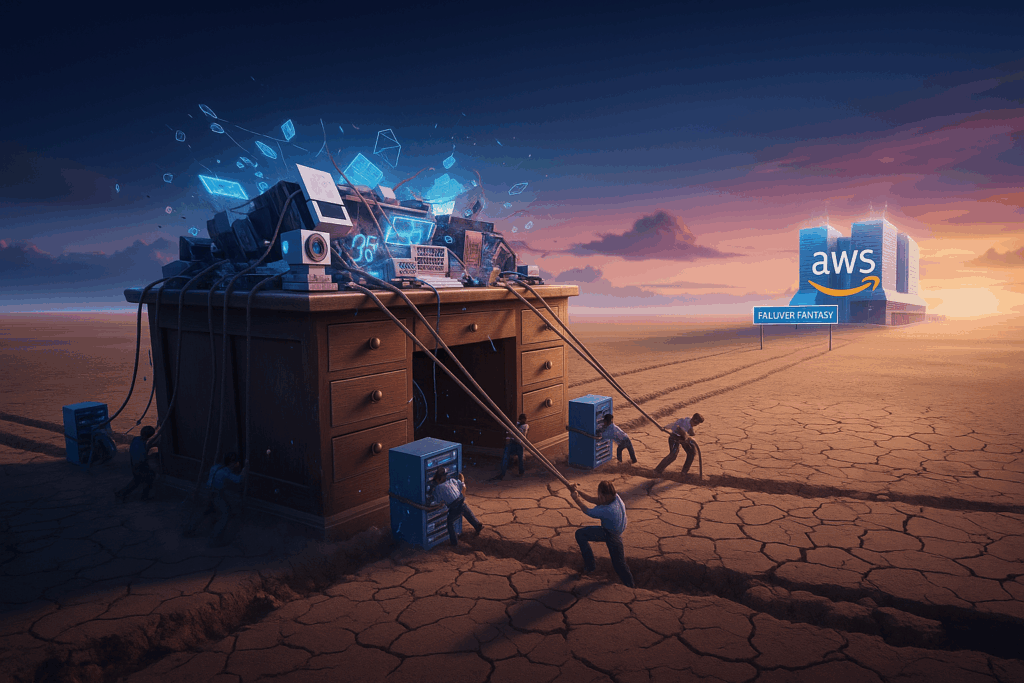

The recent failure showed us the truth: your data is stuck, and active-active failover is a fantasy for 99% of us. Here’s a pragmatic high-availability strategy that actually works.

Well, that was an intense week.

When the great AWS outage of October 2025 hit, I did what every senior IT person does: I grabbed my largest coffee mug, opened our monitoring dashboard, and settled in to watch the world burn. us-east-1, the internet’s stubbornly persistent center of gravity, was having what you’d call a very bad day.

And just like clockwork, as the post-mortems rolled in, the old, tired refrain started up on social media and in Slack: “This is why you must be multi-region.”

I’m going to tell you the truth that vendors, conference speakers, and that one overly enthusiastic junior dev on your team won’t. For 99% of companies, “multi-region” is a lie.

It’s an expensive, complex, and dangerous myth sold as a silver bullet. And the recent outage just proved it.

The “Just Be Multi-Region” fantasy

On paper, it sounds so simple. It’s a lullaby for VPs.

You just run your app in us-east-1 (Virginia) and us-west-2 (Oregon). You put a shiny global load balancer in front, and if Virginia decides to spontaneously become an underwater volcano, poof! All your traffic seamlessly fails over to Oregon. Zero downtime. The SREs are heroes. Champagne for everyone.

This is a fantasy.

It’s a fantasy that costs millions of dollars and lures development teams into a labyrinth of complexity they will never escape. I’ve spent my career building systems that need to stay online. I’ve sat in the planning meetings and priced out the “real” cost. Let me tell you, true active-active multi-region isn’t just “hard”; it’s a completely different class of engineering.

And it’s one that your company almost certainly doesn’t need.

The three killers of Multi-Region dreams

It’s not the application servers. Spinning up EC2 instances or containers in another region is the easy part. That’s what we have Infrastructure as Code for. Any intern can do that.

The problem isn’t the compute. The problem is, and always has been, the data.

Killer 1: Data has gravity, and it’s a jerk

This is the single most important concept in cloud architecture. Data has gravity.

Your application code is a PDF. It’s stateless and lightweight. You can email it, copy it, and run it anywhere. Your 10TB PostgreSQL database is not a PDF. It’s the 300-pound antique oak desk the computer is sitting on. You can’t just “seamlessly fail it over” to another continent.

To have a true seamless failover, your data must be available in the second region at the exact moment of the failure. This means you need synchronous, real-time replication across thousands of miles.

Guess what that does to your write performance? It’s like trying to have a conversation with someone on Mars. A round trip from Virginia to Oregon takes tens of milliseconds, and with synchronous replication every single database write has to wait for it. A request that makes several writes in a row pays that toll each time, and it adds up fast. Every time a user clicks “save,” they have to wait for a photon to physically travel across the country and back. Your users will hate it.
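To put rough numbers on it, here is a back-of-the-envelope sketch. Both inputs are assumptions for illustration, not measurements: a cross-country round trip of about 70 ms, and a request that issues five writes one after another, each waiting for the remote acknowledgement.

$$
t_{\text{added}} = n_{\text{writes}} \times \text{RTT} \approx 5 \times 70\ \text{ms} = 350\ \text{ms}
$$

That is a third of a second of pure waiting per request, before the database has done any actual work.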

“Okay,” you say, “we’ll use asynchronous replication!”

Great. Now when us-east-1 fails, you’ve lost every write that hadn’t replicated yet. On a good day that’s a few seconds of data; if replication was lagging when the region went down, it can be minutes. Every transaction, every new user sign-up, every shopping cart order in that window. Vanished. You’ve traded a “Recovery Time” of zero for a “Data Loss” you can’t bound in advance. Go explain to the finance department that you purposefully designed a system that can throw away the most recent customer orders. I’ll wait.

This is the trap. Your compute is portable; your data is anchored.

Killer 2: The astronomical cost

I was on a project once where the CTO, fresh from a vendor conference, wanted a full active-active multi-region setup. We scoped it.

Running 2x the servers was fine. The real cost was the inter-region data transfer.

AWS (and all cloud providers) charge an absolute fortune for data moving between their regions. It’s the “hotel minibar” of cloud services. Every single byte your database replicates, every log, every file transfer… cha-ching.

Our projected bill for the data replication and the specialized services (like Aurora Global Databases or DynamoDB Global Tables) was three times the cost of the entire rest of the infrastructure.

You are paying a massive premium for a fleet of servers, databases, and network gateways that are sitting idle 99.9% of the time. It’s like buying the world’s most expensive gym membership and only going once every five years to “test” it. It’s an insurance policy so expensive, you can’t afford the disaster it’s meant to protect you from.

Killer 3: The crushing complexity

A multi-region system isn’t just two copies of your app. It’s a brand new, highly complex, slightly psychotic distributed system that you now have to feed and care for.

You now have to solve problems you never even thought about:

- Global DNS failover: How does Route 53 know a region is down? Health checks fail. But what if the health check itself fails? What if the health check thinks Virginia is fine, but it’s just hallucinating?

- Data write conflicts: This is the fun part. What if a user in New York (writing to us-east-1) and a user in California (writing to us-west-2) update the same record at the same time? Welcome to the world of split-brain. Who wins? Nobody. You now have two “canonical” truths, and your database is having an existential crisis. Your job just went from “Cloud Architect” to “Data Therapist.”

- Testing: How do you even test a full regional failover? Do you have a big red “Kill Virginia” button? Are you sure you know what will happen when you press it? On a Tuesday afternoon? I didn’t think so.
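To make the first of those problems concrete, here is roughly what the DNS piece alone looks like in Terraform. Treat it as a minimal sketch, not a recommendation: the hostname, the `/healthz` path, and every variable and resource name are made up for illustration.

```hcl
# Hypothetical inputs; none of these names come from a real setup.
variable "zone_id" { type = string }        # Route 53 hosted zone ID
variable "primary_lb_dns" { type = string } # load balancer DNS name in us-east-1
variable "dr_lb_dns" { type = string }      # load balancer DNS name in us-west-2

# Route 53 probes the primary endpoint and marks it unhealthy
# after three consecutive failed checks.
resource "aws_route53_health_check" "primary" {
  fqdn              = var.primary_lb_dns
  port              = 443
  type              = "HTTPS"
  resource_path     = "/healthz" # assumed health endpoint
  request_interval  = 30         # seconds between probes
  failure_threshold = 3
}

# Answered while the health check passes.
resource "aws_route53_record" "primary" {
  zone_id         = var.zone_id
  name            = "app.example.com"
  type            = "CNAME"
  ttl             = 60
  records         = [var.primary_lb_dns]
  set_identifier  = "primary"
  health_check_id = aws_route53_health_check.primary.id

  failover_routing_policy {
    type = "PRIMARY"
  }
}

# Answered only when the primary is considered unhealthy.
resource "aws_route53_record" "secondary" {
  zone_id        = var.zone_id
  name           = "app.example.com"
  type           = "CNAME"
  ttl            = 60
  records        = [var.dr_lb_dns]
  set_identifier = "secondary"

  failover_routing_policy {
    type = "SECONDARY"
  }
}
```

Three resources, and the whole failover decision hangs on whether `/healthz` tells the truth. An endpoint that returns 200 while the database behind it is unreachable will keep traffic pinned to a dead region.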

You haven’t just doubled your infrastructure; you’ve 10x’d your architectural complexity.

But we have Kubernetes because we are Cloud Native

This was my favorite part of the October 2025 outage.

I saw so many teams that thought Kubernetes would save them. They had their fancy federated K8s clusters spanning multiple regions, YAML files as far as the eye could see.

And they still went down.

Why? Because Kubernetes doesn’t solve data gravity!

Your K8s cluster in us-west-2 dutifully spun up all your application pods. They woke up, stretched, and immediately started screaming: “WHERE IS MY DISK?!”

Your persistent volumes (PVs) are backed by EBS or EFS. That ‘E’ stands for ‘Elastic,’ not ‘Extradimensional.’ An EBS volume lives in a single Availability Zone, an EFS file system in a single region, and both were stubbornly sitting in Virginia. Your pods in Oregon can’t mount a disk that lives 3,000 miles away.

Unless you’ve invested in another layer of incredibly complex, eye-wateringly expensive storage replication software, your “cloud-native” K8s cluster was just a collection of very expensive, very confused applications shouting into the void for a database that was currently offline.

A pragmatic high availability strategy that actually works

So if multi-region is a lie, what do we do? Just give up? Go home? Take up farming?

Yes. You accept some downtime.

You stop chasing the “five nines” (99.999%) myth and start being honest with the business. Your goal is not “zero downtime.” Your goal is a tested and predictable recovery.

Here is the sane strategy.

1. Embrace Multi-AZ (The real HA)

This is what AWS actually means by “high availability.” Run your application across multiple Availability Zones (AZs) within a single region. An AZ is one or more physically separate data centers. The AZs in a region, such as us-east-1a and us-east-1b, sit miles apart, with independent power and networking.

This is like having a backup generator for your house. Multi-region is like building an identical, fully-furnished duplicate house in another city just in case a meteor hits your first one.

Use a Multi-AZ RDS instance. Use an Auto Scaling Group that spans AZs. This protects you from 99% of common failures: a server rack dying, a network switch failing, or a construction crew cutting a fiber line. This should be your default. It’s cheap, it’s easy, and it works.
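In Terraform, that default is only a couple of arguments. The sketch below assumes you already have a launch template and one private subnet per AZ; the variable names, identifiers, and sizes are placeholders, not values from a real deployment.

```hcl
# Hypothetical inputs for this sketch.
variable "private_subnet_ids" { type = list(string) } # one subnet per AZ
variable "launch_template_id" { type = string }

variable "db_password" {
  type      = string
  sensitive = true
}

# multi_az = true keeps a synchronous standby in a second AZ
# and fails over to it automatically.
resource "aws_db_instance" "primary" {
  identifier                = "app-db"
  engine                    = "postgres"
  instance_class            = "db.r5.large"
  allocated_storage         = 100
  username                  = "app"
  password                  = var.db_password
  multi_az                  = true
  backup_retention_period   = 7
  final_snapshot_identifier = "app-db-final"
}

# Listing subnets from several AZs lets the group spread
# instances across them and replace any that are lost.
resource "aws_autoscaling_group" "app" {
  min_size            = 3
  max_size            = 6
  desired_capacity    = 3
  vpc_zone_identifier = var.private_subnet_ids

  launch_template {
    id      = var.launch_template_id
    version = "$Latest"
  }
}
```

Synchronous replication is affordable here precisely because the standby is a few miles away instead of a continent away.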

2. Focus on RTO and RPO

Stop talking about “nines” and start talking about two simple numbers:

- RTO (Recovery Time Objective): How fast do we need to be back up?

- RPO (Recovery Point Objective): How much data can we afford to lose?

Get a real answer from the business, not a fantasy. Are a 4-hour RTO and a 15-minute RPO acceptable? For almost everyone, the answer is yes.

3. Build a “Warm Standby” (The sane DR)

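This is the strategy that actually works. It’s the “fire drill” plan, not the “build a duplicate city” plan. (Strictly speaking, AWS’s own DR taxonomy would file this closer to “backup and restore” or “pilot light,” since nothing runs in the DR region until you need it. The name matters less than the fact that you can rehearse it.)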

- Infrastructure: Your entire infrastructure is defined in Terraform or CloudFormation. You can rebuild it from scratch in any region with a single command.

- Data: You take regular snapshots of your database (e.g., every 15 minutes) and automatically copy them to your disaster recovery region (us-west-2).

- The plan: When us-east-1 dies, you declare a disaster. The on-call engineer runs the “Deploy-to-DR” script.
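One way to handle the data step without writing your own snapshot-copy job is RDS cross-region automated backups. To be clear, that is a swapped-in technique, not the scheduled snapshot copy described above. The sketch assumes a source instance with automated backups already enabled; the variable name and retention period are illustrative.

```hcl
provider "aws" {
  region = "us-east-1"
}

# The replication resource is created in the destination region.
provider "aws" {
  alias  = "dr_region"
  region = "us-west-2"
}

# Hypothetical input: ARN of the production database in us-east-1.
variable "primary_db_arn" { type = string }

# Continuously copies the instance's automated backups to us-west-2.
resource "aws_db_instance_automated_backups_replication" "dr" {
  provider               = aws.dr_region
  source_db_instance_arn = var.primary_db_arn
  retention_period       = 7 # days to keep the replicated backups
}
```

If you go this route, the DR database would be built with a point-in-time restore (a `restore_to_point_in_time` block on `aws_db_instance`) rather than from a named snapshot, so check engine support and the restore path before you rely on it.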

Here’s a taste of what that “sane” infrastructure-as-code looks like. You’re not paying for two of everything. You’re paying for a blueprint and a backup.

```hcl
# main.tf (in your primary region module)
# This is just a normal server
resource "aws_instance" "app_server" {
  count         = 3 # Your normal production count
  ami           = "ami-0abcdef123456"
  instance_type = "t3.large"
  # ... other config
}

# dr.tf (in your DR region module)
# This server doesn't even exist... until you need it.
resource "aws_instance" "dr_app_server" {
  # This is the magic.
  # This resource is "off" by default (count = 0).
  # You flip one variable (is_disaster = true) to build it.
  count    = var.is_disaster ? 3 : 0
  provider = aws.dr_region # Pointing to us-west-2

  # Placeholder ID. AMI IDs are per-region, so this must be
  # the ID of the same image as copied to us-west-2.
  ami           = "ami-0abcdef123456"
  instance_type = "t3.large"
  # ... other config
}

resource "aws_db_instance" "dr_database" {
  count    = var.is_disaster ? 1 : 0
  provider = aws.dr_region

  # Here it is: You build the new DB from the
  # latest snapshot you've been copying over.
  # snapshot_identifier restores a fresh instance from it.
  snapshot_identifier = var.latest_db_snapshot_arn
  instance_class      = "db.r5.large"
  # ... other config
}
```

Once the DR stack is up, you flip a single DNS record in Route 53 to point all traffic to the new load balancer in us-west-2.
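The snippet above leans on a few declarations it doesn’t show. Here is one way they might look, along with the DNS flip itself. Only `is_disaster`, `latest_db_snapshot_arn`, and the `dr_region` alias come from the code above; the hostname and the remaining variable names are assumptions for the sake of a complete sketch.

```hcl
# Default provider: the primary region.
provider "aws" {
  region = "us-east-1"
}

# Aliased provider that DR resources select with `provider = aws.dr_region`.
provider "aws" {
  alias  = "dr_region"
  region = "us-west-2"
}

variable "is_disaster" {
  description = "Set to true to build the DR stack and repoint DNS."
  type        = bool
  default     = false
}

variable "latest_db_snapshot_arn" {
  description = "Newest snapshot copy available in the DR region."
  type        = string
  default     = null
}

# Hypothetical inputs for the DNS record.
variable "zone_id" { type = string }
variable "primary_lb_dns" { type = string }
variable "dr_lb_dns" { type = string }

# The same variable that builds the DR stack also repoints traffic.
resource "aws_route53_record" "app" {
  zone_id = var.zone_id
  name    = "app.example.com"
  type    = "CNAME"
  ttl     = 60 # a short TTL keeps the flip from lingering in caches
  records = [var.is_disaster ? var.dr_lb_dns : var.primary_lb_dns]
}
```

Under these assumptions, the “Deploy-to-DR” script could be little more than `terraform apply -var="is_disaster=true"` plus the snapshot ARN. Run it against a scratch account on a schedule, and you have the failover test that the active-active crowd never quite gets around to.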

Yes, you have downtime (your RTO of 2–4 hours). Yes, you might lose 15 minutes of data (your RPO).

But here’s the beautiful part: it actually works, it’s testable, and it costs a tiny fraction of an active-active setup.

The AWS outage in October 2025 wasn’t a lesson in the need for multi-region. It was a global, public, costly lesson in humility. It was a reminder to stop chasing mythical architectures that look good on a conference whiteboard and focus on building resilient, recoverable systems.

So, stop feeling guilty because your setup doesn’t span three continents. You’re not lazy; you’re pragmatic. You’re the sane one in a room full of people passionately arguing about the best way to build a teleporter for that 300-pound antique oak desk.

Let them have their complex, split-brain, data-therapy sessions. You’ve chosen a boring, reliable, testable “warm standby.” You’ve chosen to get some sleep.