As we venture into the realm of serverless computing, Lambda stands out, not merely as a service, but as a transformative force that propels businesses towards unprecedented efficiency and agility.

At its core, AWS Lambda is about simplification and empowerment. By abstracting the complexities of server management, it allows developers, DevOps engineers, and cloud architects to focus on what they do best—crafting code that adds real value. Lambda takes care of the rest, from provisioning and scaling to patching and monitoring, encapsulating these tasks within a seamless, managed environment. This shift is not just incremental; it’s revolutionary. It means that launching a new feature, responding to a sudden spike in traffic, or iterating rapidly on feedback no longer requires a herculean effort or intricate orchestration of resources.

For the DevOps community and Cloud Architects, AWS Lambda isn’t just another tool in the toolbox. It’s the cornerstone that supports a more resilient, responsive, and cost-effective architecture. It’s about writing the future of cloud computing—one function at a time. As we delve deeper into the world of AWS Lambda and explore its top use cases, let’s keep in mind this vision of a serverless future, where the potential of every line of code is fully realized, and the operational overhead is no longer a barrier to innovation.

Use Case 1: Seamless API Gateway Integration – Unlocking Scalable and Secure Serverless Interactions

As we wade into the vast ocean of AWS Lambda’s capabilities, the first use case that surfaces is its seamless integration with API Gateway. This powerful combination is akin to a seasoned duo in a relay race, where API Gateway takes the baton of client requests and elegantly passes it to Lambda for the heavy lifting.

Imagine API Gateway as the vigilant gatekeeper of a fortress. It stands guard at the entrance, inspecting the credentials of each visitor, in this case the incoming HTTP requests. When an authorization method is configured (IAM permissions, a Lambda authorizer, or an Amazon Cognito user pool), only requests that pass it are allowed through the gates. Once a request is deemed worthy, API Gateway ushers it into the inner sanctum of AWS Lambda, the engine room where the logic resides.
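To make the gatekeeper a little more concrete, here is a minimal sketch of a token-based Lambda authorizer, one of the ways API Gateway can check credentials. The shared secret `EXPECTED_TOKEN` and the principal name `ticket-buyer` are assumptions made purely for illustration; a real deployment would more likely verify a signed token such as a JWT.

```python
import hmac

# Hypothetical shared secret, for illustration only.
EXPECTED_TOKEN = "demo-token"


def build_policy(principal_id, effect, resource):
    """Build the IAM policy document that API Gateway expects from an authorizer."""
    return {
        "principalId": principal_id,
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Action": "execute-api:Invoke",
                    "Effect": effect,
                    "Resource": resource,
                }
            ],
        },
    }


def lambda_handler(event, context):
    # A token authorizer receives the caller's token and the ARN of the requested method.
    token = event.get("authorizationToken", "")
    # Constant-time comparison avoids leaking information through timing.
    allowed = hmac.compare_digest(token.encode("utf-8"), EXPECTED_TOKEN.encode("utf-8"))
    return build_policy("ticket-buyer", "Allow" if allowed else "Deny", event["methodArn"])
```

API Gateway evaluates the returned policy and forwards the request to the backend function only when the effect is `Allow`.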

Here, within the walls of Lambda, the magic unfolds. The functions spring into action, executing the code that breathes life into serverless applications. The beauty of this integration lies in its robustness; developers are empowered to construct HTTP endpoints that are not only secure but can scale effortlessly with the ebb and flow of demand. It’s as if the gatekeeper can instantly clone itself to manage an unexpected throng of visitors, ensuring that each one is attended to with the same efficiency and security as when the gates first opened.

To put this into perspective, consider a digital ticketing system for a highly anticipated concert. API Gateway ensures that every ticket purchase request is legitimate and manages the influx of eager fans trying to secure their seats. Meanwhile, Lambda processes these requests, confirming seats, issuing tickets, and handling payment transactions with precision and without the need for any infrastructure concerns.
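The ticketing scenario can be sketched as a single handler behind an API Gateway Lambda proxy integration. Everything domain-specific here is assumed for illustration: the in-memory `AVAILABLE_SEATS` set stands in for a real database, and the request body shape (`{"seat": "A1"}`) is hypothetical. What is not hypothetical is the response contract: with proxy integration, the function returns a `statusCode` and a string `body`.

```python
import json

# Hypothetical in-memory seat inventory; a real system would use a database.
AVAILABLE_SEATS = {"A1", "A2", "B1"}


def _response(status_code, body):
    # Proxy integration expects a status code and a string body.
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def lambda_handler(event, context):
    """Handle a ticket purchase forwarded by API Gateway."""
    try:
        # The HTTP body arrives as a JSON string, or None when absent.
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})

    seat = payload.get("seat") if isinstance(payload, dict) else None
    if not isinstance(seat, str):
        return _response(400, {"error": "a 'seat' string is required"})
    if seat not in AVAILABLE_SEATS:
        return _response(409, {"error": f"seat {seat} is not available"})

    AVAILABLE_SEATS.discard(seat)
    return _response(200, {"seat": seat, "status": "confirmed"})
```

Note that state held in a module-level variable does not survive across execution environments, which is why a real ticketing backend would reserve seats in a shared data store.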

This synergy between AWS Lambda and API Gateway encapsulates the essence of serverless architecture — delivering scalable, reliable, and secure applications that stand ready to serve at a moment’s notice, without the burdens traditionally associated with server management.

Use Case 2: Serverless Cron Jobs – The Art of Automation

In the tapestry of daily operations, serverless cron jobs are the threads that keep the pattern consistent and vibrant. These automated tasks, like an orchestra that conducts itself, ensure that the music of your digital operations never misses a beat. They embody the principle of ‘set and forget’: you schedule routine tasks once, and they run without servers for you to manage or watch over.

Take, for instance, Amazon CloudWatch Events, now part of Amazon EventBridge, the reliable timekeeper in the world of AWS. It acts like a dependable alarm clock that goes off at the same time every day: a scheduled rule, written as a rate or cron expression, triggers a Lambda function at predetermined times. Whether it’s the nightly backup of a database or the regular cleansing of outdated data, the rule sends an event to the corresponding Lambda function, which executes the task.
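As a rough sketch of the data-cleansing case, the handler below reads the trigger time from the scheduled event and works out which records have aged past a retention window. The schedule expression in the comment, the 30-day `RETENTION` value, and the `load_records` stub are all assumptions for illustration; the schedule itself lives on the rule, not in the function code.

```python
from datetime import datetime, timedelta, timezone

# Assumed schedule expression, configured on the rule rather than in code:
#   cron(0 2 * * ? *)  -> every day at 02:00 UTC
RETENTION = timedelta(days=30)  # hypothetical retention window


def load_records():
    """Hypothetical data source; a real function would query a database."""
    return [
        {"id": "old", "created_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
        {"id": "new", "created_at": datetime(2024, 2, 20, tzinfo=timezone.utc)},
    ]


def find_expired(records, now):
    """Return the ids of records created before the retention cutoff."""
    cutoff = now - RETENTION
    return [r["id"] for r in records if r["created_at"] < cutoff]


def lambda_handler(event, context):
    # Scheduled events carry the trigger time as an ISO 8601 UTC string.
    now = datetime.strptime(event["time"], "%Y-%m-%dT%H:%M:%SZ").replace(
        tzinfo=timezone.utc
    )
    return {"expired_ids": find_expired(load_records(), now)}
```

Using the event's own timestamp instead of the wall clock keeps the function deterministic, which makes it easy to test with a hand-written event.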

Imagine a garden where the sprinkler system is set to water the plants at dawn and dusk automatically. This is the essence of serverless cron jobs. You schedule the tasks once, and like the sprinkler system, they run on their own, ensuring your garden — or in this case, your digital ecosystem — remains flourishing and healthy.

By offloading tasks such as database maintenance, inventory updates, or even the distribution of nightly reports to Lambda, companies can free up their valuable human resources for more creative and impactful work. AWS Lambda, with its serverless cron job capabilities, thus becomes an indispensable gardener, tending to the repetitive tasks that underpin operational health and business responsiveness.

Use Case 3: Event-Driven Architecture with SNS and SQS – Crafting Reactive Systems

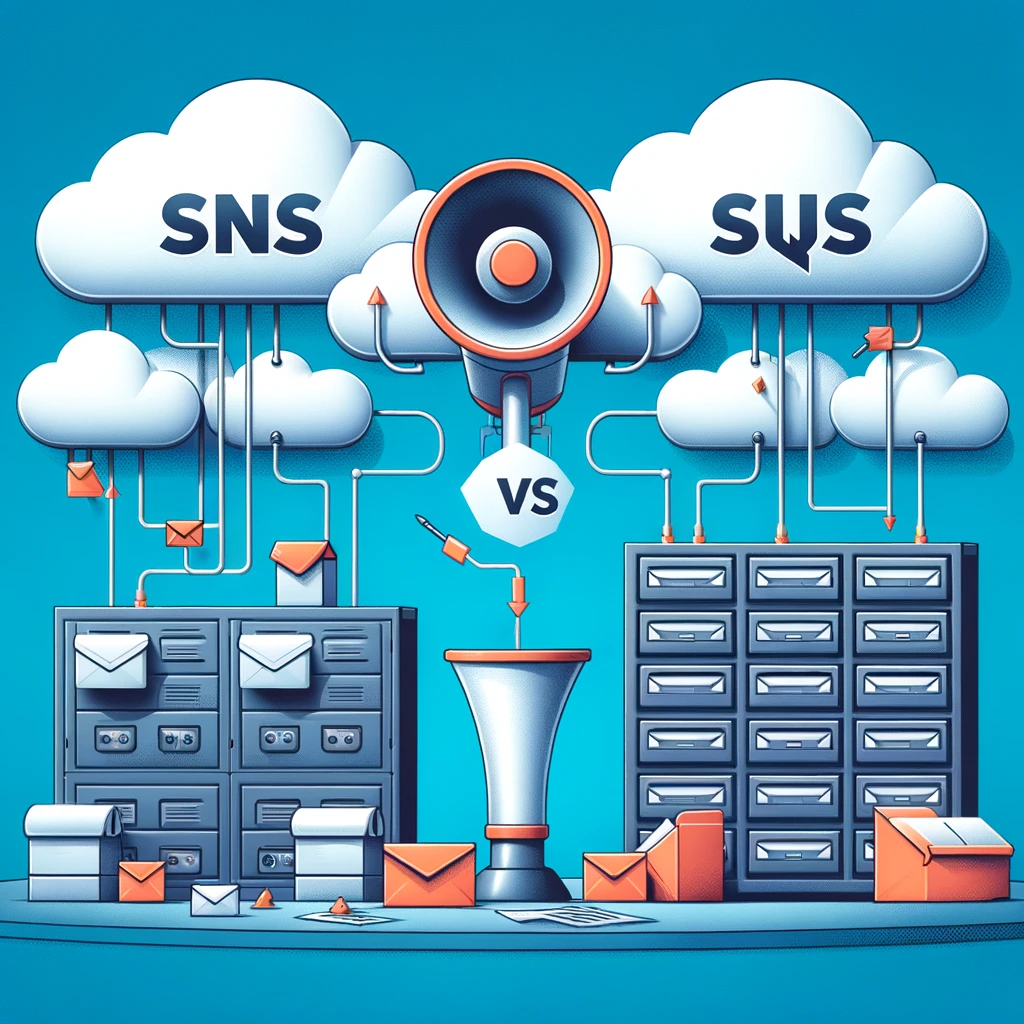

Event-Driven Architecture (EDA) is like the nervous system of the cloud ecosystem, and AWS Lambda’s integration with SNS (Simple Notification Service) and SQS (Simple Queue Service) acts as the synapses, facilitating swift and efficient communication. This paradigm is fundamental in constructing a system that’s both reactive and decoupled, where each component operates independently yet reacts to changes with precision.

Consider AWS Lambda as a responsive cell that springs into action upon receiving a signal. SNS is the herald, broadcasting messages to multiple subscribers, which can include Lambda functions, email addresses, or other endpoints. For example, when a new order is placed on an e-commerce platform, SNS announces this event, and Lambda functions across the system respond in concert, updating databases, initiating order processing, and triggering confirmation emails.
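A subscriber for the order announcement might look like the sketch below. The SNS envelope (`Records`, then `Sns`, then `Message`) is the standard event shape Lambda receives; the message schema inside it (`type` and `order_id`) is hypothetical and would be whatever the publisher chooses to send.

```python
import json


def lambda_handler(event, context):
    """React to order notifications delivered by an SNS topic."""
    processed = []
    for record in event["Records"]:
        # The published message arrives as a string inside the SNS envelope.
        message = json.loads(record["Sns"]["Message"])
        # Hypothetical schema: ignore anything that is not a new order.
        if message.get("type") == "order_placed":
            processed.append(message["order_id"])
    return {"processed": processed}
```

Because every subscriber receives its own copy of the message, a second function (for confirmation emails, say) can subscribe to the same topic without this one changing at all.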

On the other side, SQS acts as a queue manager, holding messages until a consumer is ready for them. It’s the organized queue at a bank, maintaining order and efficiency. When messages arrive, such as updates from a stock trading application, SQS lines them up and Lambda polls the queue, handing them to a function in batches. One caveat matters here: a standard queue offers only best-effort ordering and may deliver a message more than once, so a workload in which each trade must be executed in the sequence it was received needs a FIFO queue, which preserves order within each message group and so protects the integrity of transactions.
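Here is a minimal sketch of a queue consumer for the trading example, assuming a FIFO queue and an event source mapping with partial batch responses (`ReportBatchItemFailures`) enabled. The `process_trade` function and the trade fields are hypothetical. To keep trades in sequence, the handler stops at the first failure and reports that message and every later one as failed, so they are retried in order.

```python
import json


def process_trade(trade):
    """Hypothetical business logic: reject trades with a non-positive quantity."""
    if trade["quantity"] <= 0:
        raise ValueError("quantity must be positive")


def lambda_handler(event, context):
    records = event["Records"]
    for index, record in enumerate(records):
        try:
            process_trade(json.loads(record["body"]))
        except Exception:
            # Stop at the first failure so later trades are not executed
            # ahead of the failed one; report it and everything after it.
            remaining = records[index:]
            return {
                "batchItemFailures": [
                    {"itemIdentifier": r["messageId"]} for r in remaining
                ]
            }
    # An empty list tells Lambda the whole batch succeeded.
    return {"batchItemFailures": []}
```

With a standard queue, where ordering is not guaranteed anyway, the loop could instead continue past a failure and report only the messages that actually failed.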

Businesses leverage these services to build resilient systems that scale dynamically with demand. A utility company might use SNS and SQS to handle sensor data from the grid, with Lambda functions analyzing readings in real time, flagging anomalies, and automatically adjusting resource distribution to meet the current load. This setup not only enhances system resilience but also ensures scalability: as the workload increases, more Lambda instances are triggered, adapting to the load without human intervention.

Through the integration of SNS and SQS with Lambda, AWS empowers businesses to create systems that are not just robust and scalable, but also intelligent, responding to the ebb and flow of data and events as naturally as the human body responds to stimuli.

Use Case 4: File Processing with Amazon S3 – The Dynamic Duo of Efficiency

Imagine a world where the tedious task of file processing is as effortless as a leaf floating downstream, carried by the current to its destination without any hindrance. This is the reality of the synergy between AWS Lambda and Amazon S3 (Simple Storage Service) in file processing scenarios.

AWS Lambda and Amazon S3 come together like a skilled artisan and their toolbox. When a file is uploaded to S3, be it an image, a video, or a dataset, S3 emits an event notification and Lambda, like the craftsman, sets to work, molding and shaping the raw material into something of greater value. This process does not wait for a batch window; it typically starts within seconds of the upload, as if the artisan is always at the ready, tools in hand.

Let’s paint a picture with a real-life scenario: a popular photo-sharing application where users upload millions of images daily. As each photo lands in the S3 bucket, AWS Lambda springs into action like a diligent photolab technician. It resizes images to fit different device screens, compresses them for faster loading, and even applies filters as specified by the user. All of this occurs in the blink of an eye, giving users instant satisfaction as they continue to engage with the app.
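The first half of that photo pipeline, reading the S3 event and deciding what to resize, can be sketched as follows. The target widths and the `resized/` output prefix are assumptions for illustration, and the actual pixel work (which would need an imaging library) is deliberately left out. Object keys arrive URL-encoded in the event, so the handler decodes them before use.

```python
from urllib.parse import unquote_plus

IMAGE_SUFFIXES = (".jpg", ".jpeg", ".png")
TARGET_WIDTHS = (320, 1024)  # hypothetical device widths


def lambda_handler(event, context):
    """Plan one resize job per uploaded image and target width."""
    jobs = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in S3 events (a space arrives as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        if not key.lower().endswith(IMAGE_SUFFIXES):
            continue  # skip anything that is not an image
        for width in TARGET_WIDTHS:
            jobs.append(
                {
                    "bucket": bucket,
                    "source": key,
                    "target": f"resized/{width}/{key}",
                }
            )
    return jobs
```

One design point worth keeping in mind: if the resized images are written back to the same bucket, the trigger should be scoped to the upload prefix only, otherwise each output file would invoke the function again.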

Or consider the case of near-real-time log file processing. In this scenario, each log file deposited into S3 is a new chapter of information that Lambda reads, analyzes, and summarizes. It’s akin to an efficient secretary who takes comprehensive notes during a meeting and promptly provides a concise report.

This combination of AWS Lambda and Amazon S3 exemplifies the concept of serverless architecture, where scalability and responsiveness are inherent. It’s a paradigm that not only streamlines file processing but also revolutionizes it, enabling businesses to manage their data with unprecedented agility and insight.

Use Case 5: Step Functions and Glue Logic – Choreographing Workflow Precision

As we reach the zenith of our AWS Lambda exploration, let’s delve into the intricate ballet of Step Functions and its integral role in orchestrating complex workflows. AWS Step Functions stands as the grand conductor of an orchestra, directing the movement and timing of each section to create a harmonious symphony of actions.

Lambda functions are the virtuosos of this orchestral arrangement, acting as the glue logic that binds together the disparate elements of a process. They ensure that each transition is executed with grace and that any missteps are recovered from smoothly, like a seasoned dancer who effortlessly improvises to maintain the performance’s fluidity.

Consider the intricate dance of order fulfillment in an e-commerce setting. A customer’s click on the ‘buy’ button sets the workflow in motion, initiating a Step Functions state machine that charts the course from cart to delivery. Lambda functions interject at each juncture: validating payment information, updating inventory databases, notifying distribution centers, and finally, confirming shipment with the customer. Each function executes its role with precision, and if an error arises, perhaps a payment issue or an inventory shortfall, the workflow’s retry and catch rules route execution to a Lambda function that applies corrective measures without missing a beat.
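The order-fulfillment flow can be sketched as an Amazon States Language definition, built here as a Python dictionary and serialized to JSON. The state names, the function names, the region, and the account id `123456789012` in the ARNs are placeholders, and the retry settings are illustrative rather than recommended values. Each step retries transient task failures and, if it still fails, hands control to a hypothetical `HandleFailure` step.

```python
import json

# Placeholder ARN prefix; region and account id are illustrative only.
ARN_PREFIX = "arn:aws:lambda:us-east-1:123456789012:function:"


def task(function_name, next_state=None, guarded=True):
    """Build a Task state that invokes one Lambda function."""
    state = {"Type": "Task", "Resource": ARN_PREFIX + function_name}
    if guarded:
        # Retry transient failures with exponential backoff...
        state["Retry"] = [
            {
                "ErrorEquals": ["States.TaskFailed"],
                "IntervalSeconds": 2,
                "MaxAttempts": 3,
                "BackoffRate": 2.0,
            }
        ]
        # ...then route any remaining error to the recovery step.
        state["Catch"] = [{"ErrorEquals": ["States.ALL"], "Next": "HandleFailure"}]
    if next_state:
        state["Next"] = next_state
    else:
        state["End"] = True
    return state


definition = {
    "Comment": "Order fulfillment sketch",
    "StartAt": "ValidatePayment",
    "States": {
        "ValidatePayment": task("validate-payment", "UpdateInventory"),
        "UpdateInventory": task("update-inventory", "NotifyWarehouse"),
        "NotifyWarehouse": task("notify-warehouse", "ConfirmShipment"),
        "ConfirmShipment": task("confirm-shipment"),
        "HandleFailure": task("handle-failure", guarded=False),
    },
}

definition_json = json.dumps(definition, indent=2)
```

The resulting JSON string is the kind of document a state machine is created from; keeping retries and error routing in the definition means the individual functions can stay small and focused on one step each.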

Another scenario might involve multi-stage data analysis for a marketing campaign. Step Functions lay out the roadmap, from data collection to insight generation. Lambda functions clean the data, perform analytics, segment the audience, tailor the messaging, and eventually, evaluate the campaign’s impact. This well-coordinated sequence ensures that marketing teams have the insights they need to make data-driven decisions.

By leveraging AWS Step Functions and Lambda, organizations can choreograph their operations with the finesse of a ballet, where every step, every movement, is purposeful and in sync. This not only enhances efficiency but also elevates the capacity for innovation within workflow management.

The Road Ahead with AWS Lambda

As we draw the curtain on our journey through AWS Lambda’s landscape, let me take a moment to reflect on the versatility and robustness it injects into the world of cloud computing. Lambda is not just a tool; it’s a gateway to a future where efficiency and innovation are the cornerstones of digital solutions.

I encourage you, the trailblazers and architects of tomorrow’s technology, to weave these use cases into the fabric of your projects. Imagine the power at your fingertips when Lambda’s agility meets the comprehensive suite of AWS services. The result is a tapestry of solutions that are not only innovative but also seamlessly efficient.

For those who thirst for deeper knowledge, the AWS documentation provides a wealth of resources to further your understanding. Engage with the AWS community forums or explore the plethora of tutorials and case studies available online. Remember, the path to mastery is through continuous learning and sharing of knowledge.

In the spirit of a mentor guiding their protégés, I’ve endeavored to present these concepts with clarity and simplicity, to not just inform but to inspire. May this exploration of AWS Lambda spark ideas that you will mold into realities, pushing the boundaries of what’s possible in cloud computing. Embrace the serverless revolution, and let AWS Lambda propel your projects to new heights.