It was 11 PM on a Tuesday, and for the third time that week, we were on an emergency call. Not because our product had a critical bug, but because a routine deployment had, once again, mysteriously broken the internal DNS. Three of our sharpest engineers weren’t creating value; they were offering sacrifices to the capricious god of Istio, hoping it might bless our pods with connectivity.

As I stared at the sprawling diagram we’d made just to add a single, simple microservice, a heretical thought wormed its way into my brain: When did our job stop being about building software and become about… well, serving Kubernetes?

A rumor we couldn’t ignore

The next morning, amidst our collective YAML-induced hangover, someone dropped a screenshot into our team’s Slack channel. It was from some tiny, no-name startup, claiming they were getting 8x the performance of a standard Kubernetes stack at one-tenth of the cost.

The team’s reaction was a collective, cynical laugh. “Sure,” our lead SRE typed, “and my home server can out-render Pixar.” It was obviously marketing fluff. Propaganda. The post itself was deleted within an hour, but the screenshot had already gone viral. It was absurd, unbelievable, and we all knew it was nonsense.

But the idea, like a catchy, terrible pop song, got stuck in our heads.

Let’s just prove it’s impossible

That Friday, we decided to do it. We’d run a small, contained experiment, mostly for the bragging rights of publicly debunking the ridiculous claim. The plan was simple: take one of our standard, moderately complex services and see what it would take to run it on a simpler stack.

Our service, an image processor, wasn’t a behemoth, but its YAML file had grown… organically. Like a colony of particularly stubborn mold. It had sidecars, persistent volume claims, readiness probes, and enough annotations to qualify as a short novel.

Here’s a sanitized glimpse of the beast we were trying to tame:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: image-processor-svc
  labels:
    app: image-processor
spec:
  replicas: 3
  selector:
    matchLabels:
      app: image-processor
  template:
    metadata:
      labels:
        app: image-processor
    spec:
      containers:
        - name: processor
          image: our-repo/image-processor:v1.2.4
          ports:
            - containerPort: 8080
          resources:
            requests:
              memory: "512Mi"
              cpu: "250m"
            limits:
              memory: "1024Mi"
              cpu: "500m"
          readinessProbe:
            httpGet:
              path: /healthz
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 10
        - name: metrics-sidecar
          image: prom/statsd-exporter:v0.22.0
          args:
            - "--statsd.mapping-config=/etc/statsd/mapper.yml"
          ports:
            - name: metrics
              containerPort: 9102
          volumeMounts:
            - name: config-volume
              mountPath: /etc/statsd
# ...and so on, for another 150 lines.
```

We figured it would take us all afternoon just to untangle it.
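To put rough numbers on what that manifest reserves, here is a small Python sketch that multiplies the `processor` container's requests and limits by the replica count. It is only back-of-the-envelope arithmetic: the helper names are made up for illustration, only the `m`, `Ki`, `Mi`, and `Gi` suffixes are handled, and the sidecar is left out because its resource settings are not shown above.

```python
import re

# Binary-suffix multipliers for Kubernetes memory quantities (subset only).
_MEM_UNITS = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def parse_cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "250m" or "1" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(float(quantity) * 1000)


def parse_memory_bytes(quantity: str) -> int:
    """Convert a memory quantity such as "512Mi" to bytes."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi)?", quantity)
    if match is None:
        raise ValueError(f"unsupported memory quantity: {quantity!r}")
    number, unit = match.groups()
    return int(number) * _MEM_UNITS.get(unit, 1)


def total_for_replicas(replicas: int, cpu: str, memory: str) -> dict:
    """Total CPU (millicores) and memory (MiB) across all replicas."""
    return {
        "cpu_millicores": replicas * parse_cpu_millicores(cpu),
        "memory_mib": replicas * parse_memory_bytes(memory) // 1024**2,
    }


# Values copied from the processor container in the manifest above.
requested = total_for_replicas(3, cpu="250m", memory="512Mi")
limits = total_for_replicas(3, cpu="500m", memory="1024Mi")
print("requested:", requested)
print("limits:   ", limits)
```

For three replicas this works out to 750 millicores and 1536 MiB requested, with limits of 1500 millicores and 3072 MiB, before counting the sidecar or anything the cluster itself needs.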

The uncomfortable silence of success

We set up a competing stack using Firecracker microVMs, essentially tiny, lightning-fast virtual machines. The goal was to run the same container, but without the entire Kubernetes universe orbiting around it.

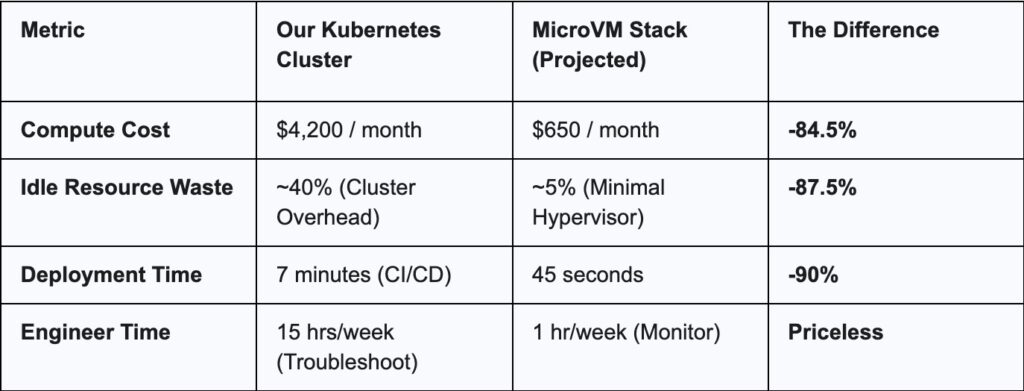
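For a sense of how little configuration a single microVM needs, here is a hedged Python sketch that builds a Firecracker-style JSON config. This is not the setup from the experiment: the function name and file paths are hypothetical, the boot arguments are a commonly used example, and the key names follow Firecracker's JSON config file format as best I understand it, so check them against the official documentation. It also assumes the container image has already been converted into a root filesystem image, which is a separate step not shown here.

```python
import json
import math


def microvm_config(kernel_path: str, rootfs_path: str,
                   cpu_millicores: int, memory_mib: int) -> dict:
    """Build a Firecracker-style config dict for one microVM (illustrative)."""
    return {
        "boot-source": {
            "kernel_image_path": kernel_path,
            "boot_args": "console=ttyS0 reboot=k panic=1 pci=off",
        },
        "drives": [{
            "drive_id": "rootfs",
            "path_on_host": rootfs_path,
            "is_root_device": True,
            "is_read_only": False,
        }],
        "machine-config": {
            # microVMs take whole vCPUs, so round fractional cores up.
            "vcpu_count": max(1, math.ceil(cpu_millicores / 1000)),
            "mem_size_mib": memory_mib,
        },
    }


config = microvm_config(
    kernel_path="/opt/microvm/vmlinux",                # hypothetical path
    rootfs_path="/opt/microvm/image-processor.ext4",   # hypothetical path
    cpu_millicores=500,   # mirrors the 500m CPU limit in the manifest
    memory_mib=1024,      # mirrors the 1024Mi memory limit
)
print(json.dumps(config, indent=2))
```

The point of the sketch is the size of the surface area: a kernel, a root filesystem, and a CPU and memory budget, with no selectors, probes, or sidecars to describe.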

By 3 PM, we had our first results. And that’s when the room went quiet. It was the kind of uncomfortable silence you get when you realize the joke you’ve been telling for months is actually on you.

The numbers weren’t just holding up; they were embarrassing us. We stared at the Grafana dashboard, waiting for the figures to make sense. They didn’t. Our projected monthly cloud bill for this single service didn’t just shrink; it plummeted.

We had spent years building a complex, expensive, and fragile machine, all to solve a problem that, it turned out, could be handled with a much, much simpler approach.

Our quest for sane infrastructure

That Friday experiment turned into a full-blown obsession. Inspired, we started building our own internal escape hatch: a cobbled-together tool we jokingly called the “SQL-ifier.” The premise was simple and, to a Kubernetes purist, utterly profane: what if you could manage infrastructure with simple, readable rules instead of YAML incantations?

Instead of a 200-line YAML file for an autoscaler, what if you could just write this?

```sql
-- This is our internal, SQL-like syntax for managing microVMs.
-- It's not a public standard (yet!).

-- Rule: If CPU usage on the image-processor service is over 80% for 3 minutes,
-- add two more instances, but never exceed 20 total.
ON high_cpu(>80%) FOR 3m
IF service.name = 'image-processor-svc'
DO SCALE service.name TO instances + 2
LIMIT 20;

-- Rule: If we see more than 10 critical payment errors in 1 minute,
-- immediately revert to the last stable version.
ON log_error(level='critical', service='payment-gateway') > 10 FOR 1m
DO ROLLBACK service 'payment-gateway' TO previous_stable;
```

It was declarative, readable, and, most importantly, it could be understood by a human being without needing a certification.
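To make the first rule's semantics concrete, here is a minimal Python sketch of how a rule like it could be evaluated. It is not the SQL-ifier's implementation, which isn't shown here: the `ScaleRule` class, the `desired_instances` function, and the one-sample-per-minute assumption are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScaleRule:
    """Hypothetical in-memory form of the high-CPU scaling rule."""
    cpu_threshold: float    # percent, e.g. 80.0
    sustained_seconds: int  # how long CPU must stay above the threshold
    step: int               # instances to add when the rule fires
    max_instances: int      # hard cap, from the LIMIT clause


def desired_instances(rule: ScaleRule, samples: list, current: int,
                      sample_interval: int = 60) -> int:
    """Return the instance count the rule asks for.

    samples holds CPU percentages, oldest first, one per sample_interval
    seconds (an assumption of this sketch).
    """
    needed = max(1, rule.sustained_seconds // sample_interval)
    window = samples[-needed:]
    # Fire only if there is a full window and every sample is above threshold.
    sustained = len(window) == needed and all(
        s > rule.cpu_threshold for s in window
    )
    if not sustained or current >= rule.max_instances:
        return current
    return min(current + rule.step, rule.max_instances)


rule = ScaleRule(cpu_threshold=80.0, sustained_seconds=180,
                 step=2, max_instances=20)

print(desired_instances(rule, [85, 91, 88], current=3))   # all three samples high
print(desired_instances(rule, [85, 70, 88], current=3))   # dip below 80% resets it
print(desired_instances(rule, [95, 95, 95], current=19))  # capped by the limit
```

With these inputs the three calls return 5, 3, and 20: the rule adds two instances only when every sample in the three-minute window is above 80%, and the cap wins when adding two would exceed it.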

How did we all end up here?

This journey forced us to ask a bigger question. Why did we, and thousands of other smart teams, willingly chain ourselves to this complexity?

The answer is surprisingly human. We bought an 18-wheeler truck to do our weekly grocery shopping.

Sure, a giant truck can carry milk and eggs. But you spend most of your time finding a place to park it, paying for diesel, getting a special license to drive it, and explaining to your neighbors why you just flattened their mailbox. Kubernetes was built for Google-scale problems. Most of us run businesses that need the reliability of a Toyota Camry, not a fleet of space shuttles. We adopted the tool because everyone else did, mistaking its complexity for sophistication.

Is your infrastructure serving you?

This whole experience led us to create a simple “mirror test.” If you’re wondering if you’re in the same boat, ask your team these questions:

- Do you dread upgrading your cluster more than you dread a root canal?

- Is a significant portion of your engineering time spent on “infra-babysitting” instead of building product features?

- Would explaining your service mesh configuration to a new hire take a whiteboard and a two-hour meeting?

If you answered “yes” to two or more, you might not have an infrastructure problem. You might have a Kubernetes problem.

This isn’t a manifesto to uninstall kubectl tomorrow. Some organizations genuinely operate at a scale where Kubernetes is not just useful, but necessary. This is just a friendly nudge. A reminder to look up from your YAML files once in a while and ask: is this tool still serving me, or have I started serving it?