You’ve done everything right. You wrote your Terraform config with the care of someone assembling IKEA furniture while mildly sleep-deprived. You double-checked your indentation (because yes, it matters). You even remembered to enable encryption, something your future self will thank you for while sipping margaritas on a beach far from production outages.

And then, just as you run terraform init, Terraform stares back at you like a cat that’s just been asked to fetch the newspaper.

    Error: Failed to load state: NoSuchBucket: The specified bucket does not exist

But… you know the bucket exists. You saw it in the AWS console five minutes ago. You named it something sensible like company-terraform-states-prod. Or maybe you didn’t. Maybe you named it tf-bucket-please-dont-delete in a moment of vulnerability. Either way, it’s there.

So why is Terraform acting like you asked it to store your state in Narnia?

The truth is, Terraform’s S3 backend isn’t broken. It’s just spectacularly bad at telling you what’s wrong. It doesn’t throw tantrums; it fails quietly, or with error messages so vague they could double as fortune cookie advice.

Let’s decode its passive-aggressive signals together.

The backend block that pretends to listen

At the heart of remote state management lies the backend "s3" block. It looks innocent enough:

    terraform {
      backend "s3" {
        bucket         = "my-team-terraform-state"
        key            = "networking/main.tfstate"
        region         = "us-west-2"
        dynamodb_table = "tf-lock-table"
        encrypt        = true
      }
    }

Simple, right? But this block is like a toddler with a walkie-talkie: it only hears what it wants to hear. If one tiny detail is off (region, permissions, bucket name), it won’t say “Hey, your bucket is in Ohio but you told me it’s in Oregon.” It’ll just shrug and fail.

And because Terraform loads the backend before it evaluates variables, you can’t use variables, locals, or any other expressions inside this block. Yes, really. The values have to be literal strings, either written in the block itself or passed at init time with -backend-config. It’s like being forced to write your grocery list in permanent marker.
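One way to soften the permanent-marker problem is Terraform’s partial backend configuration: leave the environment-specific values out of the block and supply them when you run terraform init. Here is a minimal sketch, assuming a file called prod.s3.tfbackend (the file name is made up for illustration, and the values simply reuse the ones from the example block):

```hcl
# main.tf: only the settings that never change live in the backend block.
terraform {
  backend "s3" {
    key     = "networking/main.tfstate"
    encrypt = true
  }
}

# prod.s3.tfbackend (hypothetical file name): the environment-specific values.
#
#   bucket         = "my-team-terraform-state"
#   region         = "us-west-2"
#   dynamodb_table = "tf-lock-table"
#
# Supplied at init time with:
#
#   terraform init -backend-config=prod.s3.tfbackend
```

These still aren’t variables in the Terraform sense, but at least the values live in one small file per environment instead of being scattered through your code.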

The four ways Terraform quietly sabotages you

Over the years, I’ve learned that S3 backend errors almost always fall into one of four buckets (pun very much intended).

1. The credentials that vanished into thin air

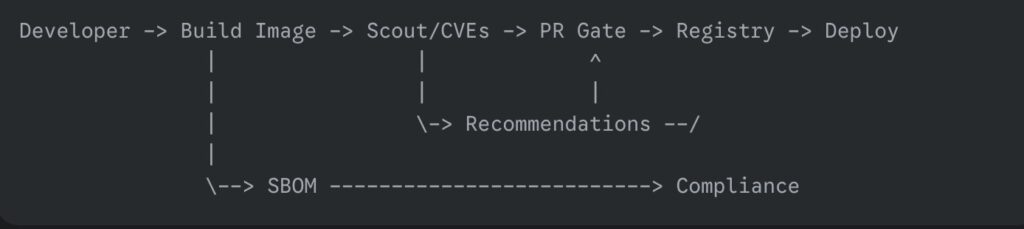

Terraform needs AWS credentials. Not “kind of.” Not “maybe.” It needs them like a coffee machine needs beans. But it won’t tell you they’re missing or wrong. It’ll just fail on the bucket, even if you’re looking at that bucket in the console.

Why? S3 normally answers 403 Forbidden when a bucket exists but your credentials can’t access it, and 404 NoSuchBucket when it can’t find the bucket at all. Which one you get depends on the identity and endpoint Terraform actually ended up using, which may not be the ones you used in the console, and neither message points at credentials as the culprit. Helpful for security. Infuriating for debugging.

Fix it: Make sure your credentials are loaded via environment variables, AWS CLI profile, or IAM roles if you’re on an EC2 instance. And no, copying your colleague’s .aws/credentials file while they’re on vacation doesn’t count as “secure.”
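If several sets of credentials live on your machine, it can help to tell the backend explicitly which one to use rather than trusting whatever happens to be in the environment. Here is a small sketch, assuming a named profile called terraform-state (the profile name is hypothetical):

```hcl
terraform {
  backend "s3" {
    bucket = "my-team-terraform-state"
    key    = "networking/main.tfstate"
    region = "us-west-2"

    # Hypothetical profile name; it must exist in your AWS config or credentials file.
    profile = "terraform-state"
  }
}
```

Running aws sts get-caller-identity --profile terraform-state first is a quick way to confirm which account and identity that profile really resolves to. Keep in mind that the backend is configured separately from the provider block, so a profile set on the provider doesn’t carry over.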

2. The region that lied to everyone

You created your bucket in eu-central-1. Your backend says us-east-1. Terraform tries to talk to the bucket in Virginia. The bucket, being in Frankfurt, doesn’t answer.

Result? Another “bucket not found” error. Because of course.

S3 buckets are region-locked, but the error message won’t mention regions. It assumes you already know. (Spoiler: you don’t.)

Fix it: Run this to check your bucket’s real region:

    aws s3api get-bucket-location --bucket my-team-terraform-state

If LocationConstraint comes back as null, the bucket lives in us-east-1. Then update your backend block accordingly. And maybe add a sticky note to your monitor: “Regions matter. Always.”

3. The lock table that forgot to show up

State locking with DynamoDB is one of Terraform’s best features; it stops two engineers from simultaneously destroying the same VPC like overeager toddlers with a piñata.

But if you declare a dynamodb_table in your backend and that table doesn’t exist? Terraform won’t create it for you. It’ll just fail with a cryptic error about acquiring the state lock.

Fix it: Create the table manually (or with separate Terraform code). It only needs one thing: a partition key named LockID of type String. And make sure your IAM user has dynamodb:GetItem, dynamodb:PutItem, and dynamodb:DeleteItem permissions on it (the backend documentation also lists dynamodb:DescribeTable).
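If you go the “separate Terraform code” route, the lock table is only a few lines. This is a sketch rather than a prescription: the resource label terraform_lock and the on-demand billing mode are my assumptions, and the table name reuses the one from the example backend block.

```hcl
# Belongs in a separate bootstrap configuration, not in the project whose state it locks.
resource "aws_dynamodb_table" "terraform_lock" {
  name         = "tf-lock-table"   # must match dynamodb_table in the backend block
  billing_mode = "PAY_PER_REQUEST" # assumption: on-demand billing, no capacity to plan
  hash_key     = "LockID"          # the partition key name Terraform expects

  attribute {
    name = "LockID"
    type = "S" # S = string
  }
}
```

Keeping it in its own configuration avoids the chicken-and-egg problem of a project needing its lock table before it can store the state that describes that table.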

Think of DynamoDB as the bouncer at a club: if it’s not there, anyone can stumble in and start redecorating.

4. The missing safety nets

Versioning and encryption aren’t strictly required, but skipping them is like driving without seatbelts because “nothing bad has happened yet.”

Without versioning, a bad terraform apply can overwrite your state forever. No undo. No recovery. Just you, your terminal, and the slow realization that you’ve deleted production.

Enable versioning:

    aws s3api put-bucket-versioning \
      --bucket my-team-terraform-state \
      --versioning-configuration Status=Enabled

And always set encrypt = true. Your state file contains secrets, IDs, and the blueprint of your infrastructure. Treat it like your diary, not your shopping list.
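The same safety nets can also be declared in Terraform, so they don’t depend on someone remembering a CLI command. Here is a minimal sketch, assuming the state bucket is managed in a separate bootstrap configuration with AWS provider v4 or later. The resource label state and the choice of AES256 (S3-managed keys) are my assumptions, not requirements.

```hcl
resource "aws_s3_bucket" "state" {
  bucket = "my-team-terraform-state"
}

# Keeps every previous version of the state file so a bad write can be rolled back.
resource "aws_s3_bucket_versioning" "state" {
  bucket = aws_s3_bucket.state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypts objects at rest by default, even if a client forgets to ask for it.
resource "aws_s3_bucket_server_side_encryption_configuration" "state" {
  bucket = aws_s3_bucket.state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256" # assumption: S3-managed keys; "aws:kms" is the other option
    }
  }
}
```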

Debugging without losing your mind

When things go sideways, don’t guess. Ask Terraform nicely for more details:

    TF_LOG=DEBUG terraform init

Yes, it spits out a firehose of logs. But buried in there is the actual AWS API call, and the real error code. Look for lines containing AWS request or ErrorResponse. That’s where the truth hides.

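Also, never run terraform init once and assume it’s locked in. If you change your backend config, you must re-initialize:

    terraform init -reconfigure

Otherwise, Terraform notices that the new config no longer matches the settings cached in .terraform/ and refuses to carry on. Note that -reconfigure ignores any existing state; if you want Terraform to copy your current state to the new location, use terraform init -migrate-state instead. It’s stubborn like that.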

A few quiet rules for peaceful coexistence

After enough late-night debugging sessions, I’ve adopted a few personal commandments:

- One project, one bucket. Don’t mix dev and prod states in the same bucket. It’s like keeping your tax documents and grocery receipts in the same shoebox: technically possible, spiritually exhausting.

- Name your state files clearly. Use paths like prod/web.tfstate instead of final-final-v3.tfstate.

- Never commit backend configs with real bucket names to public repos. (Yes, people still do this. No, it’s not cute.)

- Test your backend setup in a sandbox first. A $0.02 bucket and a tiny DynamoDB table can save you a $10,000 mistake.

It’s not you, it’s the docs

Terraform’s S3 backend works beautifully, once everything aligns. The problem isn’t the tool. It’s that the error messages assume you’re psychic, and the documentation reads like it was written by someone who’s never made a mistake in their life.

But now you know its tells. The fake “bucket not found.” The silent region betrayal. The locking table that ghosts you.

Next time it acts up, don’t panic. Pour a coffee, check your region, verify your credentials, and whisper gently: “I know you’re trying your best.”

Because honestly? It is.