It all started with a coffee and a bill. My usual morning routine. But this particular Tuesday, the AWS bill had an extra kick that my espresso lacked. The cost for a handful of m5.large instances had jumped nearly 40% over the past year. I almost spat out my coffee.

I did what any self-respecting Cloud Architect does: I blamed myself. Did I forget to terminate a dev environment? Did I leave a data transfer running to another continent? But no. After digging through the labyrinth of Cost Explorer, the truth was simpler and far more sinister: EC2 was quietly getting more expensive. Spot instances had become as predictable as a cat on a hot tin plate, and my “burstable” CPUs seemed to run out of breath if they had to do more than jog for a few minutes.

EC2, our old, reliable friend. The bedrock of the cloud. It felt like watching your trusty old car suddenly start demanding premium fuel and imported spare parts just to get to the grocery store. Something was off.

And then, it happened. A slip-up in a public Reddit forum. A senior AWS engineer accidentally posted a file named ec2-phaseout-q4-2027.pdf. It was deleted in minutes, but the internet, as we know, has the memory of an elephant with a grudge.

(Disclaimer for the nervous: This PDF is my narrative device. A ghost in the machine. A convenient plot twist. But the trends it points to? The rising costs, the architectural creaks? Those are very, very real. Now, where were we?)

The document was a bombshell. It laid out a plan to deprecate over 80% of current EC2 instance families by the end of 2027, paving the way for a “next-gen compute platform.” Was this real? I made some calls. The first partner laughed it off. The second went quiet, a little too quiet. The third, after I promised to buy them beers for a month, whispered: “We’re already planning the transition for our enterprise clients.”

Bingo.

Why our beloved EC2 is becoming a museum piece

My lead engineer summed it up beautifully last week. “Running real-time ML on today’s EC2,” he sighed, “feels like asking a 2010 laptop to edit 4K video. It’ll do it, but it’ll scream in agony the whole time, and you’d better have a fire extinguisher handy.”

He’s not wrong. For general-purpose apps, EC2 is still a trusty workhorse. But for the demanding, high-performance workloads that are becoming the norm? You can practically see the gray hairs and hear the joints creaking.

This isn’t just about cost. It’s about architecture. EC2 was built for a different era, an era before serverless was cool, before WebAssembly (WASM) was a thing, and before your toaster needed to run a Kubernetes cluster. The cracks are starting to show.

Meet AWS Nucleus, the secret successor

No press release. No re:Invent keynote. But if you’re connected to AWS insiders, you’ve probably heard whispers of a project internally codenamed “Nucleus.” We got access to this stealth-mode compute platform, and it’s unlike anything we’ve used before.

What does it feel like? Think of it this way: if Lambda and Fargate had a baby, and that baby was raised by a bare-metal server with a PhD in performance, you’d get Nucleus. It has the speed and direct hardware access of a dedicated machine, but with the auto-scaling magic of serverless.

Here are some of the early capabilities we’ve observed:

- No more cold starts. Unlike Lambda, which can sometimes feel like it’s waking up from a deep nap.

- Direct hardware access. Full control over GPU and SSD resources without the usual virtualization overhead.

- Predictive autoscaling. It analyzes traffic patterns and scales before the spike hits, not during.

- WASM-native runtime. Support for Node.js, Python, Go, and Rust is baked in from the ground up.

It’s not generally available yet, but internal teams and a select few partners are already building on it.
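To make the predictive autoscaling bullet a little more concrete, here is a minimal sketch of the general idea: forecast the next interval's traffic from recent samples, then size capacity before the spike arrives. To be clear, this is not how Nucleus does it (we have no visibility into that). The function names, the choice of Holt's linear smoothing, and every number below are my own assumptions for illustration.

```python
import math


def forecast_next(history, alpha=0.6, beta=0.4):
    """One-step-ahead forecast using Holt's linear trend smoothing.

    history: recent requests-per-second samples, oldest first.
    alpha/beta: smoothing factors for level and trend (assumed values).
    """
    if len(history) < 2:
        raise ValueError("need at least two samples to estimate a trend")
    level = history[0]
    trend = history[1] - history[0]
    for value in history[1:]:
        prev_level = level
        # Blend the new observation with what the previous level and trend predicted.
        level = alpha * value + (1 - alpha) * (level + trend)
        # Update the trend from how much the level just moved.
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + trend


def replicas_needed(forecast_rps, rps_per_replica, headroom=1.2, min_replicas=1):
    """Size capacity for the forecast plus a safety margin, never below min_replicas."""
    demand = max(forecast_rps, 0) * headroom
    return max(min_replicas, math.ceil(demand / rps_per_replica))


# Made-up traffic samples, climbing steadily toward a spike.
recent_rps = [100, 200, 300, 400]
predicted = forecast_next(recent_rps)
print(replicas_needed(predicted, rps_per_replica=80))
```

The point of the sketch is the ordering: the replica count is computed from the forecast, not from the current load, which is what "scales before the spike hits" would have to mean in practice.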

A 30-day head-to-head test

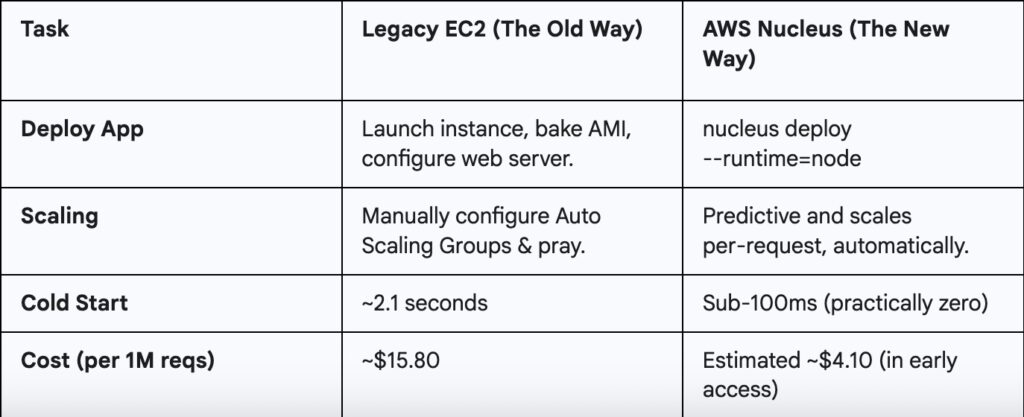

Yes, we triple-checked those cost figures. Even if AWS adjusts the pricing after the preview, the efficiency gap is too massive to ignore.

Your survival guide for the coming shift

Let’s be clear, there’s no need to panic and delete all your EC2 instances. But if this memo is even half-right, you don’t want to be caught flat-footed in a few years. Here’s what we’re doing, and what you might want to start experimenting with.

Step 1: Become a cloud whisperer

Start by pinging your AWS Solutions Architect, not directly about “Nucleus,” but something softer:

“Hey, we’re exploring options for more performant, cost-effective compute. Are there any next-gen runtimes or private betas AWS is piloting that we could look into?”

You’ll be surprised what folks share if you ask the right way.

Step 2: Test on the shadow platform

Some partners already have early-access CLI builds. If you get your hands on one, you’ll notice some familiar patterns.

```shell
# Initialize a new service from a template
nucleus init my-api --template=fastapi

# Deploy with a single command
nucleus deploy --env=staging --free-tier
```

Disclaimer: Not officially available. Use in isolated test environments only. Do not run your production database on this.

Step 3: Run a hybrid setup

If you get preview access, try bridging the old with the new. Here’s a hypothetical Terraform snippet of what that might look like:

```hcl
# Our legacy EC2 instance for the old monolith
resource "aws_instance" "legacy_worker" {
  ami           = "ami-0b5eea76982371e9" # An old Amazon Linux 2 AMI
  instance_type = "t3.medium"
}

# The new Nucleus service for a microservice
resource "aws_nucleus_service" "new_api" {
  runtime     = "go1.19"
  source_path = "./app/api"

  # This is the magic part: linking to the old world
  vpc_ec2_links = [aws_instance.legacy_worker.id]
}
```

We ran a few test loads between legacy workers and the new compute, no regressions, and latency even dropped.
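If you want to run the same kind of comparison yourself, here is a rough sketch of how a "no regressions" check might be expressed: collect latency samples from both paths, then compare the median and the 95th percentile. The helper names, the 5% tolerance, and the sample values are my own assumptions, not our actual test harness or measurements.

```python
import statistics


def summarize(samples_ms):
    """Return the median and 95th-percentile latency for a list of samples."""
    # quantiles(n=100) yields 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {"p50": statistics.median(samples_ms), "p95": cuts[94]}


def regressed(baseline_ms, candidate_ms, tolerance=0.05):
    """True if the candidate's p95 is more than `tolerance` worse than the baseline's."""
    base = summarize(baseline_ms)
    cand = summarize(candidate_ms)
    return cand["p95"] > base["p95"] * (1 + tolerance)


# Placeholder samples in milliseconds, invented purely to exercise the functions.
legacy_only = [42.0, 45.1, 43.7, 48.9, 44.2, 61.3, 43.0, 46.5]
hybrid_path = [40.2, 41.8, 39.9, 44.0, 41.1, 52.7, 40.5, 42.3]

print(summarize(legacy_only))
print(summarize(hybrid_path))
print("regression detected:", regressed(legacy_only, hybrid_path))
```

Comparing tail latency rather than the average is a deliberate choice here: a new hop between the old world and the new one tends to show up in the slowest requests first.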

Step 4: Estimate the savings yourself

Even with preview pricing, the gap is noticeable. A simple Python script can give you a rough idea.
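The arithmetic behind an estimate like this is simple enough to sketch without any library at all. In the version below, the function names are mine and the per-unit rates are placeholders you would have to replace with whatever pricing you are actually quoted. Nothing here reflects real Nucleus pricing.

```python
def estimate_monthly_cost(vcpu_hours, memory_gb_hours, vcpu_hour_rate, gb_hour_rate):
    """Usage-based estimate: vCPU-hours and GB-hours, each billed at its own rate."""
    return vcpu_hours * vcpu_hour_rate + memory_gb_hours * gb_hour_rate


def savings(current_cost, estimated_cost):
    """Return (absolute savings, savings as a percentage of the current cost)."""
    diff = current_cost - estimated_cost
    pct = diff / current_cost * 100 if current_cost else 0.0
    return diff, pct


# Placeholder inputs: swap in your own usage, your own bill, and real rates.
estimate = estimate_monthly_cost(
    vcpu_hours=1000,
    memory_gb_hours=2000,
    vcpu_hour_rate=0.05,  # assumed USD per vCPU-hour
    gb_hour_rate=0.01,    # assumed USD per GB-hour
)
diff, pct = savings(current_cost=200.0, estimated_cost=estimate)
print(f"Estimated cost: ${estimate:.2f}, savings: ${diff:.2f} ({pct:.1f}%)")
```

The script that follows wraps the same idea in a fictional library call.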

```python
# Fictional library to estimate costs
import aws_nucleus_estimator

# Your current monthly bill for a specific workload
current_ec2_cost = 4200

# Estimate based on vCPU hours and memory
# (These numbers are for illustration only)
estimated_nucleus_cost = aws_nucleus_estimator.estimate(
    vcpu_hours=1200,
    memory_gb_hours=2400
)

print(f"Rough monthly savings: ${current_ec2_cost - estimated_nucleus_cost}")
```

This is bigger than just EC2

Let’s be honest. This shift isn’t just about cutting costs or shrinking cold start times. It’s about redefining what “compute” even means. EC2 isn’t being deprecated because it’s broken. It’s being phased out because modern workloads have evolved, and the old abstractions are starting to feel like training wheels we forgot to take off.

A broader pattern is emerging across the industry. What AWS is allegedly doing with Nucleus mirrors a larger movement:

- Google Cloud is reportedly piloting a Cloud Run variant that uses a WASM-based runtime.

- Microsoft Azure is quietly testing a system to blur the line between containers and functions.

- Oracle, surprisingly, has been sponsoring development tools optimized for WASM-native environments.

The foundational idea is clear: cloud platforms are moving toward fast-boot, auto-scaling, WASM-capable compute that sits somewhere between Lambda and Kubernetes, but without the overhead of either.

Is EC2 the new legacy?

It’s strange to say, but EC2 is starting to feel like “bare metal” did a decade ago: powerful, essential, but something you try to abstract away.

One of our SREs shared this gem the other day:

“A couple of our junior engineers thought EC2 was some kind of disaster recovery tool for Kubernetes.”

That’s from a Fortune 100 company. When your flagship infrastructure service starts raising eyebrows from fresh grads, you know a generational shift is underway.

The cloud is evolving, again. But this isn’t a gentle, planned succession. It’s a Cambrian explosion in real time. New, bizarre forms of compute are crawling out of the digital ooze, and the old titans, once thought invincible, are starting to look slow and clumsy. They don’t get a gold watch and a retirement party. They become fossils, their skeletons propping up the new world.

EC2 isn’t dying tomorrow. It’s becoming a geological layer. It’s the bedrock, the sturdy but unglamorous foundation upon which nimbler, more specialized predators will hunt. The future isn’t about killing the virtual machine; it’s about making it an invisible implementation detail. In the same way that most of us stopped thinking about the physical server racks in a data center, we’ll soon stop thinking about the VM. We’ll just care about the work that needs doing.

So no, EC2 isn’t dying. It’s becoming a legend. And in the fast-moving world of technology, legends belong in museums, admired from a safe distance.