Let’s be honest. You’ve typed 127.0.0.1 more times than you’ve called your own mother. We treat it like the sole, heroic occupant of the digital island we call localhost. It’s the only phone number we know by heart, the only doorbell we ever ring.

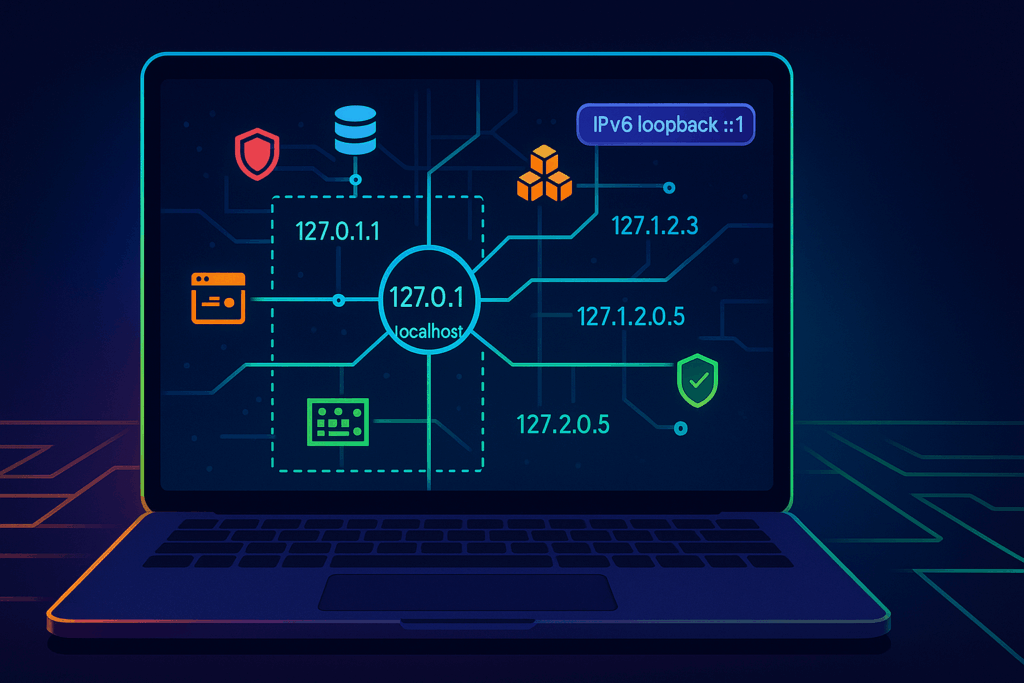

Well, brace yourself for a revelation that will fundamentally alter your relationship with your machine. 127.0.0.1 is not alone. In fact, it lives in a sprawling, chaotic metropolis with over 16 million other addresses, all of them squatting inside your computer, rent-free.

Ignoring these neighbors condemns you to a life of avoidable port conflicts and flimsy localhost tricks. But give them a chance, and you’ll unlock cleaner dev setups, safer tests, and fewer of those classic “Why is my test API saying hello to the entire office Wi-Fi?” moments of sheer panic.

So buckle up. We’re about to take the scenic tour of the neighborhood that the textbooks conveniently forgot to mention.

Your computer is secretly a megacity

The early architects of the internet, in their infinite wisdom, set aside the entire 127.0.0.0/8 block of addresses for this internal monologue. That’s 16,777,216 addresses in total, running from 127.0.0.0 to 127.255.255.255, with 127.0.0.1 through 127.255.255.254 being the ones you would normally hand out to services. Every single one of them is designed to do one thing: loop right back to your machine. It’s the ultimate homebody network.
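If you want to check the arithmetic yourself, Python’s standard ipaddress module will do it. This is only a sanity-check sketch, and the variable names are mine rather than anything official.

```python
import ipaddress

# The IPv4 block reserved for loopback.
loopback = ipaddress.ip_network("127.0.0.0/8")

# A /8 leaves 24 host bits, so 2 ** 24 addresses in total.
total = loopback.num_addresses

# The two ends of the block, and the first and last addresses
# you would normally assign to a service.
block_start, block_end = loopback[0], loopback[-1]
first_usable, last_usable = loopback[1], loopback[-2]

print(total)
print(block_start, block_end)
print(first_usable, last_usable)
```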

Think of your computer not as a single-family home with one front door, but as a gigantic apartment building with millions of mailboxes. And for years, you’ve been stubbornly sending all your mail to apartment #1.

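Most operating systems only bother to introduce you to 127.0.0.1, but the kernel knows the truth. On Linux, it treats any address in the 127.x.y.z range as a VIP guest with an all-access pass back to itself, no setup required. macOS is pickier: only 127.0.0.1 is configured on its loopback interface by default, so the other addresses have to be added as aliases before you can bind to them. Either way, you get a private, internal playground for wiring up your applications.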

A handy rule of thumb? Any address starting with 127 is your friend. 127.0.0.2, 127.10.20.30, even 127.1.1.1: they all lead home.
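Not sure how welcoming your own machine is? A small probe can tell you which of these addresses it will actually let you bind to. This is a minimal sketch using Python’s socket module; the helper name can_bind is my own invention, and the results depend on your operating system, so no expected output is shown.

```python
import socket


def can_bind(address: str) -> bool:
    """Return True if this machine lets us bind a TCP socket to `address`."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            # Port 0 asks the OS for any free port, so we never collide
            # with a service that is already running.
            sock.bind((address, 0))
        except OSError:
            return False
        return True


for candidate in ("127.0.0.1", "127.0.0.2", "127.10.20.30", "127.1.1.1"):
    print(candidate, can_bind(candidate))
```

If an address reports False, that is usually a sign it needs to be added to the loopback interface first.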

Everyday magic tricks with your newfound neighbors

Once you realize you have a whole city at your disposal, you can stop playing port Tetris. Here are a few party tricks your localhost never told you it could do.

The art of peaceful coexistence

We’ve all been there. It’s 2 AM, and two of your microservices are having a passive-aggressive standoff over port 8080. They both want it, and neither will budge. You could start juggling ports like a circus performer, or you could give them each their own house.

Assign each service its own loopback address. Now they can both listen on port 8080 without throwing a digital tantrum.

First, give your new addresses some memorable names in your /etc/hosts file (or C:\Windows\System32\drivers\etc\hosts on Windows).

# /etc/hosts

127.0.0.1 localhost

127.0.1.1 auth-service.local

127.0.1.2 inventory-service.local

Now, you can run both services simultaneously.

# Terminal 1: Start the auth service

$ go run auth/main.go --bind 127.0.1.1:8080

# Terminal 2: Start the inventory service

$ python inventory/app.py --host 127.0.1.2 --port 8080

Voilà. http://auth-service.local:8080 and http://inventory-service.local:8080 are now living in perfect harmony. No more port drama.
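If you’d like to see the trick without any real services involved, here is a minimal sketch that opens two listening sockets on the same port number, one per loopback address. The helper names listen_on and share_port are hypothetical, and the sketch assumes both addresses are bindable on your machine (true out of the box on Linux; on macOS they would need to exist as loopback aliases first).

```python
import socket


def listen_on(address: str, port: int) -> socket.socket:
    """Open a listening TCP socket on one specific loopback address."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind((address, port))
        sock.listen()
    except OSError:
        sock.close()
        raise
    return sock


def share_port(address_a: str, address_b: str):
    """Listen on the same port number on two different addresses."""
    # Let the OS pick a free port for the first address...
    first = listen_on(address_a, 0)
    port = first.getsockname()[1]
    try:
        # ...then reuse that exact port number on the second address.
        second = listen_on(address_b, port)
    except OSError:
        first.close()
        raise
    return first, second


auth, inventory = share_port("127.0.1.1", "127.0.1.2")
print(auth.getsockname(), inventory.getsockname())
auth.close()
inventory.close()
```

Both sockets report the same port and different addresses, which is exactly why the two services above can each claim 8080.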

The safety of an invisible fence

Binding a service to 0.0.0.0 is the developer equivalent of leaving your front door wide open with a neon sign that says, “Come on in, check out my messy code, maybe rifle through my database.” It’s convenient, but it invites the entire network to your private party.

Binding to a 127.x.y.z address, however, is like building an invisible fence. The service is only accessible from within the machine itself. This is your insurance policy against accidentally exposing a development database full of ridiculous test data to the rest of the company.
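One way to keep yourself honest is a tiny guard that says out loud who can reach a given bind address before a service starts. This is a sketch built on Python’s ipaddress module; the function name bind_scope and its return strings are my own labels, not part of any framework.

```python
import ipaddress


def bind_scope(host: str) -> str:
    """Describe who can reach a service bound to the IP literal `host`."""
    addr = ipaddress.ip_address(host)
    if addr.is_unspecified:
        # 0.0.0.0 (or :: for IPv6) means every interface on the machine.
        return "all interfaces"
    if addr.is_loopback:
        # Anything in 127.0.0.0/8, or ::1 for IPv6.
        return "this machine only"
    return "one network interface"


for host in ("0.0.0.0", "127.0.1.2", "192.168.1.10"):
    print(host, "->", bind_scope(host))
```

A dev script could refuse to start when the answer is anything other than "this machine only", which turns the invisible fence into an enforced one.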

Advanced sorcery for the brave

Ready to move beyond the basics? Treating the 127 block as a toolkit unlocks some truly powerful patterns.

Taming local TLS

Testing services that require TLS can be a nightmare. With your new loopback addresses, it becomes trivial. You can create a single local Certificate Authority (CA) and issue a certificate with Subject Alternative Names (SANs) for each of your local services.

# /etc/hosts again

127.0.2.1 api-gateway.secure.local

127.0.2.2 user-db.secure.local

127.0.2.3 billing-api.secure.local

Now, api-gateway.secure.local can talk to user-db.secure.local over HTTPS, with valid certificates, all without a single packet leaving your laptop. This is perfect for testing mTLS, SNI, and other scenarios where your client needs to be picky about its connections.
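The fiddly part of that certificate is usually the SAN list itself. As a small sketch, the helper below turns the hosts mapping above into a comma-separated subjectAltName value. The function name is hypothetical, and including the IP entries alongside the DNS names is my assumption; drop them if your clients only ever connect by name.

```python
# Names and addresses copied from the hosts entries above.
SECURE_HOSTS = {
    "api-gateway.secure.local": "127.0.2.1",
    "user-db.secure.local": "127.0.2.2",
    "billing-api.secure.local": "127.0.2.3",
}


def subject_alt_name(hosts: dict) -> str:
    """Build a subjectAltName value with a DNS and an IP entry per service."""
    entries = []
    for name, address in hosts.items():
        entries.append(f"DNS:{name}")
        entries.append(f"IP:{address}")
    return ",".join(entries)


print(subject_alt_name(SECURE_HOSTS))
```

The resulting string is in the form OpenSSL expects for the subjectAltName extension, so it can be dropped into whatever tool you use to issue certificates from your local CA.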

Concurrent tests without the chaos

Running automated acceptance tests in parallel when every one of them expects a database on port 5432 is a port-collision nightmare. By pinning each test runner, and the database it talks to, to its own unique 127 address, you can spin them all up at once. Each test gets its own isolated world, and your CI pipeline no longer has to run the suites one after another.
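How you hand out those addresses is up to you. One simple approach, sketched here, derives an address from each worker’s index. The base address 127.0.20.0 and the function name address_for_worker are arbitrary choices of mine, and how you obtain the worker index depends on your test runner.

```python
import ipaddress

LOOPBACK = ipaddress.ip_network("127.0.0.0/8")
# Hypothetical starting point; any unused corner of the 127 block works.
BASE = ipaddress.IPv4Address("127.0.20.0")


def address_for_worker(index: int) -> str:
    """Map a zero-based worker index to its own loopback address."""
    if index < 0:
        raise ValueError("worker index must be non-negative")
    addr = BASE + index + 1  # worker 0 gets 127.0.20.1
    # Stay inside the loopback block and off its very last address.
    if addr not in LOOPBACK or addr == LOOPBACK.broadcast_address:
        raise ValueError("ran out of loopback addresses")
    return str(addr)


for worker in range(3):
    print(worker, address_for_worker(worker))
```

Each worker can then start its database on its own address at port 5432 and point its tests there.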

The fine print and other oddities

This newfound power comes with a few quirks you should know about. This is the part of the tour where we point out the strange neighbor who mows his lawn at midnight.

- The container dimension: Inside a Docker container, 127.0.0.1 refers to the container itself, not the host machine. It’s a whole different loopback universe in there. To reach the host from a container, you need the special hostname or gateway address provided by your platform (such as host.docker.internal on Docker Desktop).

- The IPv6 minimalist: IPv6 scoffs at IPv4’s 16 million addresses. For loopback, it gives you one: ::1. That’s it. This explains the classic mystery of “it works with 127.0.0.1 but fails with localhost.” Often, localhost resolves to ::1 first, and if your service is only listening on IPv4, it won’t answer the door. The lesson? Be explicit, or make sure your service listens on both.

- The SSRF menace: If you’re building security filters to prevent Server-Side Request Forgery (SSRF), remember that blocking just 127.0.0.1 is like locking the front door but leaving all the windows open. You must block the entire 127.0.0.0/8 range and ::1.
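For the SSRF point, here is a minimal sketch of a loopback check that covers the whole 127 block rather than a single address. The function name is hypothetical. It only inspects IP literals, so a real filter would also need to resolve hostnames and run the same check on every address they resolve to. It also unwraps IPv4-mapped IPv6 addresses such as ::ffff:127.0.0.1, a form that a plain string comparison would miss.

```python
import ipaddress


def is_loopback_literal(host: str) -> bool:
    """Return True if `host` is an IP literal that points back at this machine."""
    try:
        # URLs wrap IPv6 literals in brackets, so strip them first.
        addr = ipaddress.ip_address(host.strip("[]"))
    except ValueError:
        # Not an IP literal. Hostnames must be resolved and re-checked
        # by the caller; this function makes no claim about them.
        return False
    # Unwrap IPv4-mapped IPv6 addresses like ::ffff:127.0.0.1.
    mapped = getattr(addr, "ipv4_mapped", None)
    if mapped is not None:
        addr = mapped
    return addr.is_loopback


for target in ("127.0.0.1", "127.10.20.30", "[::1]", "::ffff:127.0.0.2", "10.0.0.5"):
    print(target, is_loopback_literal(target))
```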

Your quick start eviction notice for port conflicts

Ready to put this into practice? Here’s a little starter kit you can paste today.

First, add some friendly names to your hosts file.

# Add these to your /etc/hosts file

127.0.10.1 api.dev.local

127.0.10.2 db.dev.local

127.0.10.3 cache.dev.local

Next, add these as aliases to your loopback interface. On Linux this is usually optional, because the whole 127.0.0.0/8 range already lands on lo, but it’s tidy. On macOS it’s required, since only 127.0.0.1 is configured on lo0 out of the box.

# For Linux

sudo ip addr add 127.0.10.1/8 dev lo

sudo ip addr add 127.0.10.2/8 dev lo

sudo ip addr add 127.0.10.3/8 dev lo

# For macOS

sudo ifconfig lo0 alias 127.0.10.1

sudo ifconfig lo0 alias 127.0.10.2

sudo ifconfig lo0 alias 127.0.10.3

Now, you can bind three different services, all to their standard ports, without a single collision.

# Run your API on its default port

api-server --bind api.dev.local:3000

# Run Postgres on its default port

postgres -D /path/to/data -c listen_addresses=db.dev.local

# Run Redis on its default port

redis-server --bind cache.dev.local

Check that everyone is home and listening.
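Before reaching for each service’s own client, a generic TCP probe is one quick way to do that. This is a sketch, with is_listening as a hypothetical helper name; the ports assume the API on 3000 as above and the usual defaults of 5432 for Postgres and 6379 for Redis. It uses the raw addresses, though the names from your hosts file would work just as well once they resolve.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections at host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and failed name lookups.
        return False


SERVICES = {
    "api": ("127.0.10.1", 3000),
    "db": ("127.0.10.2", 5432),
    "cache": ("127.0.10.3", 6379),
}

for name, (host, port) in SERVICES.items():
    state = "up" if is_listening(host, port) else "down"
    print(f"{name}: {host}:{port} is {state}")
```

A successful connection only proves that something is listening, so the service-specific checks are still worth running.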

# Check the API

curl http://api.dev.local:3000/health

# Check the database (requires psql client)

psql -h db.dev.local -U myuser -d mydb -c "SELECT 1"

# Check the cache

redis-cli -h cache.dev.local ping

# Expected output: PONG

Welcome to the neighborhood

Your laptop isn’t a one-address town; it’s a small city with streets you haven’t named and doors you haven’t opened. For too long, you’ve been forcing all your applications to live in a single, crowded, noisy studio apartment at 127.0.0.1. The database is sleeping on the couch, the API server is hogging the bathroom, and the caching service is eating everyone else’s food from the fridge. It’s digital chaos.

Giving each service its own loopback address is like finally moving them into their own apartments in the same building. It’s basic digital hygiene. Suddenly, there’s peace. There’s order. You can visit each one without tripping over the others. You stop being a slumlord for your own processes and become a proper city planner.

So go ahead, break the monogamous, and frankly codependent, relationship you’ve had with 127.0.0.1. Explore the neighborhood. Hand out a few addresses. Let your development environment behave like a well-run, civilized society instead of a digital mosh pit. Your sanity and your services will thank you for it. After all, good fences make good neighbors, even when they’re all living inside your head.