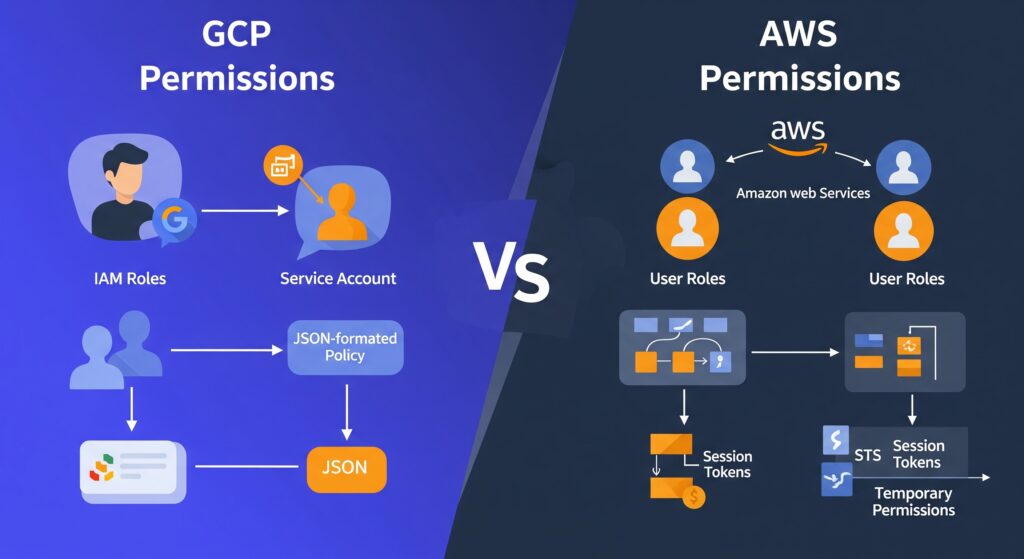

The digital world we’ve built in the cloud, brimming with applications and data, doesn’t just run on good intentions. It relies on robust, thoughtfully designed security. Protecting your workloads, whether a simple website or a sprawling enterprise system, isn’t just an add-on; it’s the bedrock. Both Amazon Web Services (AWS) and Google Cloud (GCP) are titans in this space, and both are deeply committed to security. Yet, when it comes to managing the flow of network traffic, who gets in, who gets out, they approach the task with distinct philosophies and toolsets. This guide explores these differences, aiming to offer a clearer path as you navigate their distinct approaches to network protection.

Let’s set the scene with a familiar concept: securing a bustling apartment complex. AWS, in this scenario, provides a two-tier security system. You have vigilant guards stationed at the main entrance to the entire neighborhood (these are your Network ACLs), checking everyone coming and going from the broader area. Then, each individual apartment building within that neighborhood has its own dedicated doorman (your Security Groups), working from a specific guest list for that building alone.

GCP, on the other hand, operates more like a highly efficient central security office for the entire complex. They manage a master digital key system that controls access to every single apartment door (your VPC Firewall Rules). If your name isn’t on the approved list for Apartment 3B, you simply don’t get in. And to ensure overall order, the building management (think Hierarchical Firewall Policies) can also lay down some general community guidelines that apply to everyone.

The AWS approach, two levels of security

Venturing into the AWS ecosystem, you’ll encounter its distinct, layered strategy for network defense.

Security Groups, your instances personal guardian

First up are Security Groups. These act as the personal guardian for your individual resources, like your EC2 virtual servers or your RDS databases, operating right at their virtual doorstep.

A key characteristic of these guardians is that they are stateful. What does this mean in everyday terms? Picture a friendly doorman. If he sees you (your application) leave your apartment to run an errand (make an outbound connection), he’ll recognize you when you return and let you straight back in (allow the inbound response) without needing to re-check your credentials. It’s this “memory” of the connection that defines statefulness.

By default, a new Security Group is cautious: it won’t allow any unsolicited inbound traffic, but it’s quite permissive about outbound connections. Crucially, this doorman only works with “allow” lists. You provide a list of who is permitted; you don’t give them a separate list of who to explicitly turn away.

Network ACLs, the subnets border patrol

The second layer in AWS is the Network Access Control List, or NACL. This acts as the border patrol for an entire subnet, a segment of your network. Any resource residing within that subnet is subject to the NACL’s rules.

Unlike the doorman-like Security Group, the NACL border patrol is stateless. This means they have no memory of past interactions. Every packet of data, whether entering or leaving the subnet, is inspected against the rule list as if it’s the first time it’s been seen. Consequently, you must create explicit rules for both inbound traffic and outbound traffic, including any return traffic for connections initiated from within. If you allow a request out, you must also explicitly allow the expected response back in.

NACLs give you the power to create both “allow” and “deny” rules, and these rules are processed in numerical order, the lowest numbered rule that matches the traffic gets applied. The default NACL that comes with your AWS virtual network is initially wide open, allowing all traffic in and out. Customizing this is a key security step.

GCPs unified firewall strategy

Shifting our focus to Google Cloud, we find a more consolidated approach to network security, primarily orchestrated through its VPC Firewall Rules.

Centralized command VPC Firewall Rules

GCP largely centralizes its network traffic control into what it calls VPC (Virtual Private Cloud) Firewall Rules. This is your main toolkit for defining who can talk to whom. These rules are defined at the level of your entire VPC network, but here’s the important part: they are enforced right at each individual Virtual Machine (VM) instance. It’s like the central security office sets the master rules, but each VM’s own “door” (its network interface) is responsible for upholding them. This provides granular control without the explicit two-tier system seen in AWS.

Another point to note is that GCP’s VPC networks are global resources. This means a single VPC can span multiple geographic regions, and your firewall rules can be designed with this global reach in mind, or they can be tailored to specific regions or zones.

Decoding GCPs rulebook

Let’s look at the characteristics of these VPC Firewall Rules:

- Stateful by default: Much like the AWS Security Group’s friendly doorman, GCP’s firewall rules are inherently stateful for allowed connections. If you permit an outbound connection from one of your VMs, the system intelligently allows the return traffic for that specific conversation.

- The power of allow and deny: Here’s a significant distinction. GCP’s primary firewall system allows you to create both “allow” rules and explicit “deny” rules. This means you can use the same mechanism to say “you’re welcome” and “you’re definitely not welcome,” a capability that in AWS often requires using the stateless NACLs for explicit denies.

- Priority is paramount: Every firewall rule in GCP has a numerical priority (lower numbers signify higher precedence). When network traffic arrives, GCP evaluates rules in order of this priority. The first rule whose criteria match the traffic determines the action (allow or deny). Think of it as a clearly ordered VIP list for your network access.

- Targeting with precision: You don’t have to apply rules to every VM. You can pinpoint their application to:

.- All instances within your VPC network.

.- Instances tagged with specific Network Tags (e.g., applying a “web-server” tag to a group of VMs and crafting rules just for them).

.- Instances running with particular Service Accounts.

Hierarchical policies, governance from above

Beyond the VPC-level rules, GCP offers Hierarchical Firewall Policies. These allow you to set broader security mandates at the Organization or Folder level within your GCP resource hierarchy. These top-level rules then cascade down, influencing or enforcing security postures across multiple projects and VPCs. It’s akin to the overall building management or a homeowners association setting some fundamental security standards that everyone in the complex must adhere to, regardless of their individual apartment’s specific lock settings.

AWS and GCP, how their philosophies differ

So, when you stand back, what are the core philosophical divergences?

AWS presents a distinctly layered security model. You have Security Groups acting as stateful firewalls directly attached to your instances, and then you have Network ACLs as a stateless, broader brush at the subnet boundary. This separation allows for independent configuration of these two layers.

GCP, in contrast, leans towards a more unified and centralized model with its VPC Firewall Rules. These rules are stateful by default (like Security Groups) but also incorporate the ability to explicitly deny traffic (a characteristic of NACLs). The enforcement is at the instance level, providing that fine granularity, but the rule definition and management feel more consolidated. The Hierarchical Policies then add a layer of overarching governance.

Essentially, GCP’s VPC Firewall Rules aim to provide the capabilities of both AWS Security Groups and some aspects of NACLs within a single, stateful framework.

Practical impacts, what this means for you

Understanding these architectural choices has real-world consequences for how you design and manage your network security.

- Stateful deny is a GCP convenience: One notable practical difference is how you handle explicit “deny” scenarios. In GCP, creating a stateful “deny” rule is straightforward. If you want to block a specific group of VMs from making outbound connections on a particular port, you create a deny rule, and the stateful nature means you generally don’t have to worry about inadvertently blocking legitimate return traffic for other allowed connections. In AWS, achieving an explicit, targeted deny often involves using the stateless NACLs, which requires more careful management of return traffic.

A peek at default settings:

- AWS: When you launch a new EC2 instance, its default Security Group typically blocks all incoming traffic (no uninvited guests) but allows all outgoing traffic (meaning your instance has the permission to reach out, and if it’s in a public subnet with a route to an Internet Gateway, it can indeed connect to the internet). The default NACL for your subnet, however, starts by allowing all traffic in and out. So, your instance’s “doorman” is initially strict, but the “neighborhood gate” is open.

- GCP: A new GCP VPC network has implied rules: deny all incoming traffic and allow all outgoing traffic. However, if you use the “default” network that GCP often creates for new projects, it comes with some pre-populated permissive firewall rules, such as allowing SSH access from any IP address. It’s like your new apartment has a few general visitor passes already active; you’ll want to review these and decide if they fit your security posture. review these and decide if they fit your security posture.

- Seeing the traffic flow logging and monitoring: Both platforms offer ways to see what your network guards are doing. AWS provides VPC Flow Logs, which can capture information about the IP traffic going to and from network interfaces in your VPC. GCP also has VPC Flow Logs, and importantly, its Firewall Rules Logging feature allows you to log when specific firewall rules are hit, giving you direct insight into which rules are allowing or denying traffic.

Real-world scenario blocking web access

Let’s make this concrete. Suppose you want to prevent a specific set of VMs from accessing external websites via HTTP (port 80) and HTTPS (port 443).

In GCP:

- You would create a single VPC Firewall Rule.

- Set its Direction to Egress (for outgoing traffic).

- Set the Action on match to Deny.

- For Targets, you’d specify your VMs, perhaps using a network tag like “no-web-access”.

- For Destination filters, you’d typically use 0.0.0.0/0 (to apply to all external destinations).

- For Protocols and ports, you’d list tcp:80 and tcp:443.

- You’d assign this rule a Priority that is numerically lower (meaning higher precedence) than any general “allow outbound” rules that might exist, ensuring this deny rule is evaluated first.

This approach is quite direct. The rule explicitly denies the specified outbound traffic for the targeted VMs, and GCP’s stateful handling simplifies things.

In AWS:

To achieve a similar explicit block, you would most likely turn to Network ACLs:

- You’d identify or create an NACL associated with the subnet(s) where your target EC2 instances reside.

- You would add outbound rules to this NACL to explicitly Deny traffic destined for TCP ports 80 and 443 from the source IP range of your instances (or 0.0.0.0/0 from those instances if they are NATed).

- Because NACLs are stateless, you’d also need to ensure your inbound NACL rules don’t inadvertently block legitimate return traffic for other connections if you’re not careful, though for an outbound deny, the primary concern is the outbound rule itself.

Alternatively, with Security Groups in AWS, you wouldn’t create an explicit “deny” rule. Instead, you would ensure that no outbound rule in any Security Group attached to those instances allows traffic on TCP ports 80 and 443 to 0.0.0.0/0. If there’s no “allow” rule, the traffic is implicitly denied by the Security Group. This is less of an explicit block and more of a “lack of permission.”

The AWS method, particularly if relying on NACLs for the explicit deny, often requires a bit more careful consideration of the stateless nature and rule ordering.

Charting your cloud security course

So, we’ve seen that AWS and GCP, while both aiming for robust network security, take different paths to get there. AWS offers a distinctly layered defense: Security Groups serve as your instance-specific, stateful guardians, while Network ACLs provide a broader, stateless patrol at your subnet borders. This gives you two independent levers to pull.

GCP, conversely, champions a more unified system with its VPC Firewall Rules. These are stateful, apply at the instance level, and critically, incorporate the ability to explicitly deny traffic, consolidating functionalities that are separate in AWS. The addition of Hierarchical Firewall Policies then allows for overarching governance.

Neither of these architectural philosophies is inherently superior. They represent different ways of thinking about the same fundamental challenge: controlling network traffic. The “best” approach is the one that aligns with your organization’s operational preferences, your team’s expertise, and the specific security requirements of your applications.

By understanding these core distinctions, the layers, the statefulness, and the locus of control, you’re better equipped. You’re not just choosing a cloud provider; you’re consciously architecting your digital defenses, rule by rule, ensuring your corner of the cloud remains secure and resilient.