It’s 4:53 PM on a Friday. You’re pushing a one-line change to an IAM policy, a change so trivial, so utterly benign, that you barely give it a second thought. You run terraform apply, lean back in your chair, and dream of the weekend. Then your terminal returns a greeting from the abyss: Error: Error acquiring the state lock.

Somewhere across the office, or perhaps across the country, a teammate has just started a plan on their own, seemingly innocuous change. You are now locked in a digital standoff. The weekend is officially on hold. Your shared Terraform state file, once a symbol of collaboration and a single source of truth, has become a temperamental roommate who insists on using the kitchen right when you need to make dinner. And they’re a very, very slow cook.

Our Terraform honeymoon phase

It wasn’t always like this. Most of us start our Terraform journey in a state of blissful simplicity. Remember those early days? A single, elegant main.tf file, a tidy remote backend in an S3 bucket, and a DynamoDB table to handle the locking. It was the infrastructure equivalent of a brand-new, minimalist apartment. Everything had its place. Deployments were clean, predictable, and frankly, a little bit boring.

Our setup looked something like this, a testament to a simpler time:

    # in main.tf
    terraform {
      backend "s3" {
        bucket         = "our-glorious-infra-state-prod"
        key            = "global/terraform.tfstate"
        region         = "us-east-1"
        dynamodb_table = "terraform-state-lock-prod"
        encrypt        = true
      }
    }

    resource "aws_vpc" "main" {
      cidr_block = "10.0.0.0/16"
      # ... and so on
    }

It worked beautifully. Until it didn’t. The problem with minimalist apartments is that they don’t stay that way. You add a person, then another. You buy more furniture. Soon, you’re tripping over things, and that one clean kitchen becomes a chaotic battlefield of conflicting needs.

The kitchen gets crowded

As our team and infrastructure grew, our once-pristine state file started to resemble a chaotic shared kitchen during rush hour. The initial design, meant for a single chef, was now buckling under the pressure of a full restaurant staff.

The state lock standoff

The first and most obvious symptom was the state lock. It’s less of a technical “race condition” and more of a passive-aggressive duel between two colleagues who both need the only good frying pan at the exact same time. The result? Burnt food, frayed nerves, and a CI/CD pipeline that spends most of its time waiting in line.

The mystery of the shared spice rack

With everyone working out of the same state file, we lost any sense of ownership. It became a communal spice rack where anyone could move, borrow, or spill things. You’d reach for the salt (a production security group) only to find someone had replaced it with sugar (a temporary rule for a dev environment). Every terraform apply felt like a gamble. You weren’t just deploying your change; you were implicitly signing off on the current, often mysterious, state of the entire kitchen.

The pre-apply prayer

This led to a pervasive culture of fear. Before running an apply, engineers would perform a ritualistic dance of checks, double-checks, and frantic Slack messages: “Hey, is anyone else touching prod right now?” The terraform plan output would scroll for pages, a cryptic epic poem of changes, 95% of which had nothing to do with you. You’d squint at the screen, whispering a little prayer to the DevOps gods that you wouldn’t accidentally tear down the customer database because of a subtle dependency you missed.

The domino effect of a single spilled drink

Worst of all was the tight coupling. Our infrastructure became a house of cards. A team modifying a network ACL for their new microservice could unintentionally sever connectivity for a legacy monolith nobody had touched in years. It was the architectural equivalent of trying to change a lightbulb and accidentally causing the entire building’s plumbing to back up.

An uncomfortable truth appears

For a while, we blamed Terraform. We complained about its limitations, its verbosity, and its sharp edges. But eventually, we had to face an uncomfortable truth: the tool wasn’t the problem. We were. Our devotion to the cult of the single centralized state—the idea that one file to rule them all was the pinnacle of infrastructure management—had turned our single source of truth into a single point of failure.

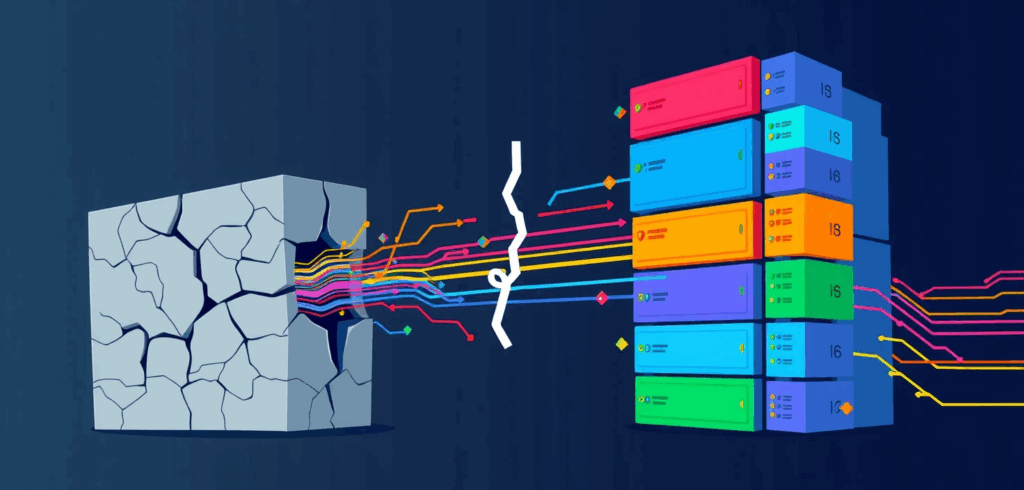

The great state breakup

The solution was as terrifying as it was liberating: we had to break up with our monolithic state. It was time to move out of the chaotic shared house and give every team their own well-equipped studio apartment.

Giving everyone their own kitchenette

First, we dismantled the monolith. We broke our single Terraform configuration into dozens of smaller, isolated stacks. Each stack managed a specific component or application, like a VPC, a Kubernetes cluster, or a single microservice’s infrastructure. Each had its own state file.
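As a minimal sketch of what "its own state file" could mean in practice, here is how one stack's backend.tf might look. The bucket, region, and lock table are carried over from the original configuration; the key path is a hypothetical naming scheme, not something the original setup specifies.

```hcl
# infra/networking/backend.tf
terraform {
  backend "s3" {
    bucket = "our-glorious-infra-state-prod"

    # Hypothetical key: each stack gets a distinct object in the bucket,
    # which is what gives it a separate state file.
    key = "networking/terraform.tfstate"

    region         = "us-east-1"
    dynamodb_table = "terraform-state-lock-prod"
    encrypt        = true
  }
}
```

The stacks can keep sharing one DynamoDB table here, because the S3 backend builds each lock entry from the bucket and key. Two stacks with different keys take different locks and no longer wait on each other.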

Our directory structure transformed from a single folder into a federation of independent projects:

    infra/
    ├── networking/
    │   ├── vpc.tf
    │   └── backend.tf        # Manages its own state for the VPC
    ├── databases/
    │   ├── rds-main.tf
    │   └── backend.tf        # Manages its own state for the primary RDS
    └── services/
        ├── billing-api/
        │   ├── ecs-service.tf
        │   └── backend.tf    # Manages state for just the billing API
        └── auth-service/
            ├── iam-roles.tf
            └── backend.tf    # Manages state for just the auth service

The state lock standoffs vanished overnight. Teams could work in parallel without tripping over each other. The blast radius of any change was now beautifully, reassuringly small.
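Splitting state raises a practical question: how does one stack read values that another stack owns, such as the VPC ID? One common option, though not necessarily what this team used, is the terraform_remote_state data source. The sketch below assumes the networking stack stores its state under the hypothetical key networking/terraform.tfstate; the file names, the vpc_id output, and the security group are illustrative as well.

```hcl
# infra/networking/outputs.tf (hypothetical file)
# The networking stack publishes only what other stacks are allowed to read.
output "vpc_id" {
  description = "ID of the shared VPC"
  value       = aws_vpc.main.id
}

# infra/services/billing-api/network.tf (hypothetical file)
# Read-only view of the networking stack's outputs. This stack can
# consume them but cannot change the networking stack's resources.
data "terraform_remote_state" "networking" {
  backend = "s3"

  config = {
    bucket = "our-glorious-infra-state-prod"
    key    = "networking/terraform.tfstate" # assumed key for the networking stack
    region = "us-east-1"
  }
}

# Illustrative consumer: a security group placed in the shared VPC.
resource "aws_security_group" "billing_api" {
  name   = "billing-api"
  vpc_id = data.terraform_remote_state.networking.outputs.vpc_id
}
```

With this approach the dependency between stacks becomes an explicit, named output instead of an implicit reference buried in one giant graph, which keeps the coupling visible in code review.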

Letting infrastructure live with its application

Next, we embraced GitOps patterns. Instead of a central infrastructure repository, we decided that infrastructure code should live with the application it supports. It just makes sense. The code for an API and the infrastructure it runs on are a tightly coupled couple; they should live in the same house. This meant code reviews for application features and infrastructure changes happened in the same pull request, by the same team.

Tasting the soup before serving it

Finally, we made surprises a thing of the past by validating plans before they ever reached the main branch. We set up simple CI workflows that ran terraform plan on every pull request. No more mystery meat deployments. The plan became a clear, concise contract of what was about to happen, reviewed and approved before merge.

A snippet from our GitHub Actions workflow looked like this:

    name: 'Terraform Plan Validation'

    on:
      pull_request:
        paths:
          - 'infra/**'
          - '.github/workflows/terraform-plan.yml'

    jobs:
      plan:
        name: 'Terraform Plan'
        runs-on: ubuntu-latest
        steps:
          - name: Checkout
            uses: actions/checkout@v4

          - name: Setup Terraform
            uses: hashicorp/setup-terraform@v3
            with:
              terraform_version: 1.5.0

          # Init must configure the real backend: terraform plan reads the
          # remote state, so it fails after `init -backend=false`. Credentials
          # for the state bucket are assumed to be set up elsewhere in the job.
          - name: Terraform Init
            run: terraform init -input=false

          - name: Terraform Plan
            run: terraform plan -no-color -input=false

Stories from the other side

This wasn’t just a theoretical exercise. A fintech firm we know split its monolithic repo into 47 micro-stacks. Their deployment speed shot up by 70%, not because they wrote code faster, but because they spent less time waiting and untangling conflicts. Another startup moved from a central Terraform setup to the AWS CDK (TypeScript), embedding infra in their app repos. They cut their time-to-deploy in half, freeing their SRE team from being gatekeepers and allowing them to become enablers.

Guardrails not gates

Terraform is still a phenomenally powerful tool. But the way we use it has to evolve. A centralized remote state, when not designed for scale, becomes a source of fragility, not strength. Just because you can put all your eggs in one basket doesn’t mean you should, especially when everyone on the team needs to carry that basket around.

The most scalable thing you can do is let teams build independently. Give them ownership, clear boundaries, and the tools to validate their work. Build guardrails to keep them safe, not gates to slow them down. Your Friday evenings will thank you for it.